OpenCV – Overview

OpenCV is a cross-platform library for developing real-time computer vision applications. It focuses mainly on image processing, video capture, and video analysis, including features such as face detection and object detection.

Let’s start the chapter by defining the term “Computer Vision”.

Computer Vision

Computer Vision can be defined as a discipline that explains how to reconstruct, interpret, and understand a 3D scene from its 2D images, in terms of the properties of the structure present in the scene. It deals with modeling and replicating human vision using computer software and hardware.

Computer Vision overlaps significantly with the following fields −

- Image Processing − It focuses on image manipulation.

- Pattern Recognition − It explains various techniques to classify patterns.

- Photogrammetry − It is concerned with obtaining accurate measurements from images.

Computer Vision Vs Image Processing

Image processing deals with image-to-image transformation. The input and output of image processing are both images.

Computer vision is the construction of explicit, meaningful descriptions of physical objects from their images. The output of computer vision is a description or an interpretation of structures in a 3D scene.
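The distinction can be made concrete with a toy example. The sketch below is plain Java with no OpenCV dependency; the class and method names are hypothetical and chosen only for illustration. invert() maps an image to another image (image processing), while brightRegion() maps an image to a description of what it contains (a very simplified stand-in for computer vision).

```java
import java.util.Arrays;

/** Plain-Java illustration; these names are hypothetical and not part of OpenCV. */
class ProcessingVsVision {

    /** Image processing: image in, image out (an inverted 8-bit grayscale image). */
    static int[][] invert(int[][] gray) {
        int[][] out = new int[gray.length][];
        for (int y = 0; y < gray.length; y++) {
            out[y] = new int[gray[y].length];
            for (int x = 0; x < gray[y].length; x++) {
                out[y][x] = 255 - gray[y][x];
            }
        }
        return out;
    }

    /**
     * Toy computer vision: image in, description out.
     * Returns {minX, minY, maxX, maxY} of the pixels brighter than the
     * threshold, or null when no pixel qualifies.
     */
    static int[] brightRegion(int[][] gray, int threshold) {
        int minX = Integer.MAX_VALUE, minY = Integer.MAX_VALUE, maxX = -1, maxY = -1;
        for (int y = 0; y < gray.length; y++) {
            for (int x = 0; x < gray[y].length; x++) {
                if (gray[y][x] > threshold) {
                    minX = Math.min(minX, x);
                    minY = Math.min(minY, y);
                    maxX = Math.max(maxX, x);
                    maxY = Math.max(maxY, y);
                }
            }
        }
        return maxX < 0 ? null : new int[] {minX, minY, maxX, maxY};
    }

    public static void main(String[] args) {
        int[][] image = {
            {0,   0,   0, 0},
            {0, 200, 220, 0},
            {0, 210, 230, 0},
        };
        System.out.println(Arrays.deepToString(invert(image)));
        System.out.println(Arrays.toString(brightRegion(image, 128)));
    }
}
```

Real computer vision pipelines produce far richer descriptions (object classes, poses, 3D structure), but the input and output types differ in the same way.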

Applications of Computer Vision

Here are some of the major domains where Computer Vision is heavily used.

Robotics Application

- Localization − Determine robot location automatically

- Navigation

- Obstacle avoidance

- Assembly (peg-in-hole, welding, painting)

- Manipulation (e.g. PUMA robot manipulator)

- Human Robot Interaction (HRI) − Intelligent robotics to interact with and serve people

Medicine Application

- Classification and detection (e.g. lesion or cells classification and tumor detection)

- 2D/3D segmentation

- 3D human organ reconstruction (MRI or ultrasound)

- Vision-guided robotics surgery

Industrial Automation Application

- Industrial inspection (defect detection)

- Assembly

- Barcode and package label reading

- Object sorting

- Document understanding (e.g. OCR)

Security Application

- Biometrics (iris, fingerprint, face recognition)

- Surveillance − Detecting certain suspicious activities or behaviors

Transportation Application

- Autonomous vehicle

- Safety, e.g., driver vigilance monitoring

Features of OpenCV Library

Using OpenCV library, you can −

- Read and write images

- Capture and save videos

- Process images (filter, transform)

- Perform feature detection

- Detect specific objects such as faces, eyes, and cars in videos or images

- Analyze video, i.e., estimate the motion in it, subtract the background, and track objects in it

OpenCV was originally developed in C++. In addition, Python and Java bindings are provided. OpenCV runs on various operating systems such as Windows, Linux, OS X, FreeBSD, NetBSD, and OpenBSD.

This tutorial explains the concepts of OpenCV with examples using Java bindings.

OpenCV Library Modules

Following are the main library modules of the OpenCV library.

Core Functionality

This module covers the basic data structures such as Scalar, Point, Range, etc., that are used to build OpenCV applications. In addition to these, it also includes the multidimensional array Mat, which is used to store the images. In the Java library of OpenCV, this module is included as a package with the name org.opencv.core.

Image Processing

This module covers various image processing operations such as image filtering, geometrical image transformations, color space conversion, histograms, etc. In the Java library of OpenCV, this module is included as a package with the name org.opencv.imgproc.

Video

This module covers the video analysis concepts such as motion estimation, background subtraction, and object tracking. In the Java library of OpenCV, this module is included as a package with the name org.opencv.video.

Video I/O

This module covers video capturing and video codecs. In the Java library of OpenCV, this module is included as a package with the name org.opencv.videoio.

calib3d

This module includes basic multiple-view geometry algorithms, single and stereo camera calibration, object pose estimation, stereo correspondence, and elements of 3D reconstruction. In the Java library of OpenCV, this module is included as a package with the name org.opencv.calib3d.

features2d

This module includes the concepts of feature detection and description. In the Java library of OpenCV, this module is included as a package with the name org.opencv.features2d.

Objdetect

This module includes the detection of objects and instances of the predefined classes such as faces, eyes, mugs, people, cars, etc. In the Java library of OpenCV, this module is included as a package with the name org.opencv.objdetect.

Highgui

This is an easy-to-use interface with simple UI capabilities. In the Java library of OpenCV, the features of this module are included in two different packages, namely org.opencv.imgcodecs and org.opencv.videoio.

A Brief History of OpenCV

OpenCV was initially an Intel research initiative to advance CPU-intensive applications. It was officially launched in 1999.

- In the year 2006, its first major version, OpenCV 1.0 was released.

- In October 2009, the second major version, OpenCV 2 was released.

- In August 2012, OpenCV was taken over by the nonprofit organization OpenCV.org.

OpenCV – Environment

In this chapter, you will learn how to install OpenCV and set up its environment in your system.

Installing OpenCV

First of all, you need to download OpenCV onto your system. Follow the steps given below.

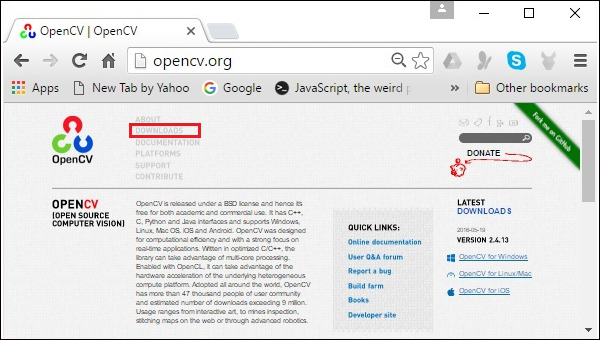

Step 1 − Open the homepage of OpenCV. You will see its homepage as shown below.

Step 2 − Now, click the Downloads link highlighted in the above screenshot. On clicking, you will be directed to the downloads page of OpenCV.

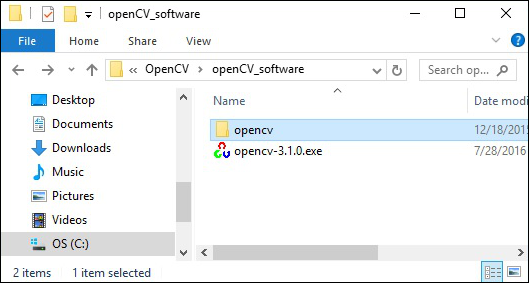

Step 3 − On clicking the highlighted link in the above screenshot, a file named opencv-3.1.0.exe will be downloaded. Extract this file to generate a folder opencv in your system, as shown in the following screenshot.

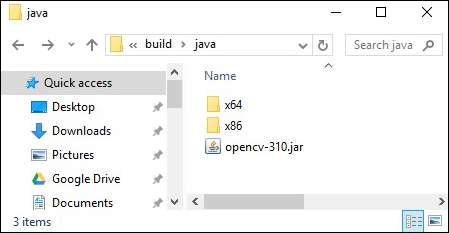

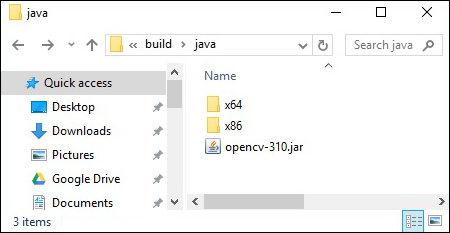

Step 4 − Open the folder OpenCV → build → java. Here you will find the jar file of OpenCV named opencv-310.jar. Save this file in a separate folder for further use.

Eclipse Installation

After downloading the required JAR files, you have to add them to your Eclipse environment. You can do this either by setting the Build Path to these JAR files or by using pom.xml.

Setting Build Path

Following are the steps to set up OpenCV in Eclipse −

Step 1 − Ensure that you have installed Eclipse in your system. If not, download and install Eclipse in your system.

Step 2 − Open Eclipse, click File → New, and open a new project as shown in the following screenshot.

Step 3 − On selecting the project, you will get the New Project wizard. In this wizard, select Java project and proceed by clicking the Next button, as shown in the following screenshot.

Step 4 − On proceeding forward, you will be directed to the New Java Project wizard. Create a new project and click Next, as shown in the following screenshot.

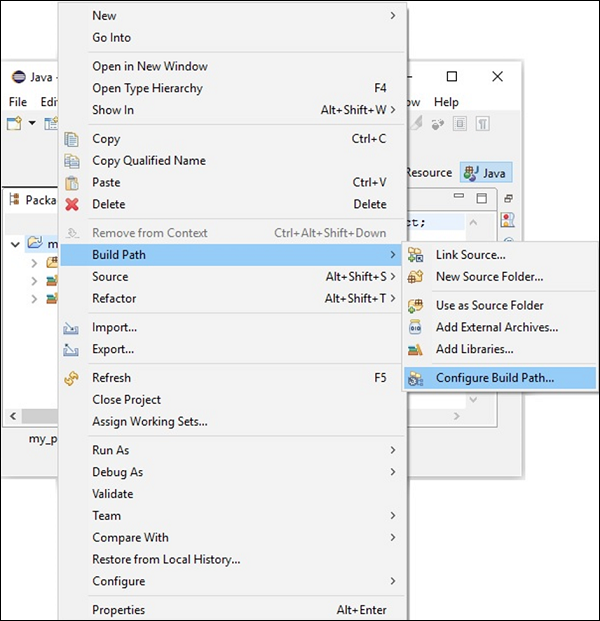

Step 5 − After creating a new project, right-click on it. Select Build Path and click Configure Build Path… as shown in the following screenshot.

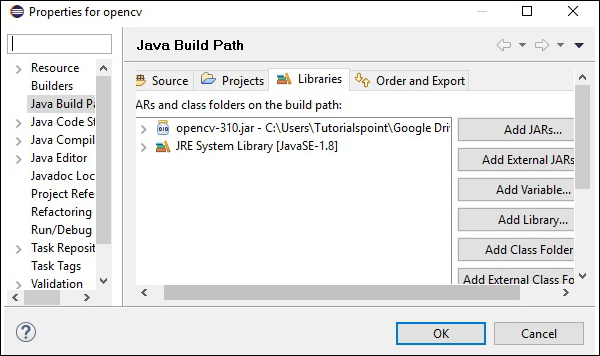

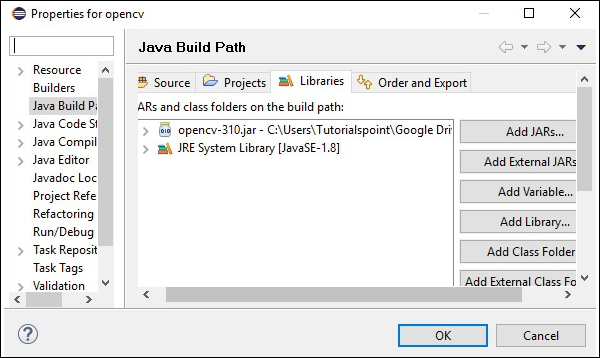

Step 6 − On clicking the Build Path option, you will be directed to the Java Build Path wizard. Click the Add External JARs button, as shown in the following screenshot.

Step 7 − Select the path where you have saved the file opencv-310.jar.

Step 8 − On clicking the Open button in the above screenshot, those files will be added to your library.

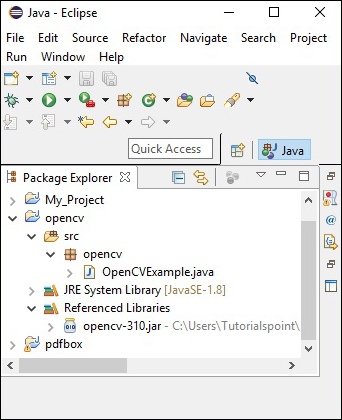

Step 9 − On clicking OK, you will successfully add the required JAR files to the current project and you can verify these added libraries by expanding the Referenced Libraries.

Setting the Path for Native Libraries

In addition to the JAR files, you need to set the path for the native libraries (DLL files) of OpenCV.

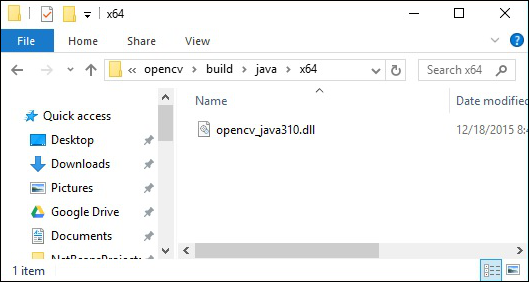

Location of DLL files − Open the installation folder of OpenCV and go to the sub-folder build → java. Here you will find two folders, x64 (64 bit) and x86 (32 bit), which contain the DLL files of OpenCV.

Open the folder that matches your operating system to see the DLL file, as shown in the following screenshot.

Now, set the path for this file too by following the steps given below −

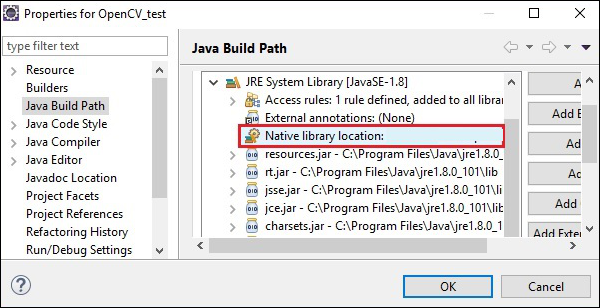

Step 1 − Once again, open the Java Build Path window. Here you can observe the added JAR file and the JRE System Library.

Step 2 − On expanding it, you will get the system libraries and Native library location, as highlighted in the following screenshot.

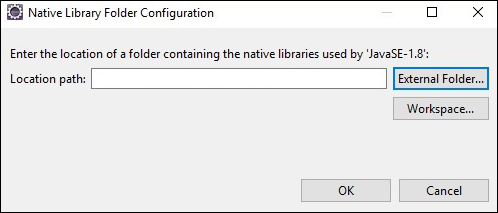

Step 3 − Double-click on the Native library location. Here, you can see the Native Library Folder Configuration window as shown below.

Here, click the button External Folder… and select the location of the DLL file in your system.

OpenCV – Storing Images

To capture an image, we use devices like cameras and scanners. These devices record the numerical values of the image (e.g., pixel values). OpenCV is a library that processes digital images, so we need to store these images for processing.

The Mat class of OpenCV library is used to store the values of an image. It represents an n-dimensional array and is used to store image data of grayscale or color images, voxel volumes, vector fields, point clouds, tensors, histograms, etc.

This class comprises two data parts: the header and a pointer.

- Header − Contains information such as the size, the method used for storing, and the address of the matrix (constant in size).

- Pointer − Points to the matrix that stores the pixel values of the image (its size varies with the image).
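Why a small header sits in front of separately stored pixel data becomes clearer with a toy model. The sketch below is plain Java and does not use OpenCV; TinyMat and its fields are hypothetical names chosen for illustration, and the real Mat is implemented in native code. It assumes that row() and col() return views that share storage with the original matrix, which is how the Mat example later in this chapter behaves.

```java
import java.util.Arrays;

/** Hypothetical stand-in for Mat: a small header plus a reference to shared element data. */
final class TinyMat {
    final int rows, cols;      // header: dimensions of this view
    final int offset, stride;  // header: start position and distance between rows
    final int[] data;          // element storage, shared between views

    TinyMat(int rows, int cols) {
        this(rows, cols, 0, cols, new int[rows * cols]);
    }

    private TinyMat(int rows, int cols, int offset, int stride, int[] data) {
        this.rows = rows;
        this.cols = cols;
        this.offset = offset;
        this.stride = stride;
        this.data = data;
    }

    /** Returns a 1 x cols view of row y; no elements are copied. */
    TinyMat row(int y) {
        return new TinyMat(1, cols, offset + y * stride, stride, data);
    }

    /** Returns a rows x 1 view of column x; no elements are copied. */
    TinyMat col(int x) {
        return new TinyMat(rows, 1, offset + x, stride, data);
    }

    /** Sets every element of this view to the given value. */
    TinyMat setTo(int value) {
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                data[offset + r * stride + c] = value;
            }
        }
        return this;
    }

    int get(int r, int c) {
        return data[offset + r * stride + c];
    }

    public static void main(String[] args) {
        TinyMat m = new TinyMat(5, 5);
        m.row(0).setTo(1);   // writes through to m's storage
        m.col(3).setTo(3);
        for (int r = 0; r < m.rows; r++) {
            System.out.println(Arrays.toString(Arrays.copyOfRange(m.data, r * 5, r * 5 + 5)));
        }
    }
}
```

Because each view only carries a new header, creating it is cheap, and writing through a view changes the matrix it was taken from.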

The Mat Class

The OpenCV Java library provides this class with the same name (Mat) within the package org.opencv.core.

Constructors

The Mat class of OpenCV Java library has various constructors, using which you can construct the Mat object.

| S.No | Constructors and Description |
|---|---|
| 1 | Mat() − This is the default constructor, with no parameters. In most cases, we use it to create an empty matrix and pass it to other OpenCV methods. |
| 2 | Mat(int rows, int cols, int type) − This constructor accepts three integer parameters representing the number of rows and columns in a 2D array and the type of the array (that is to be used to store data). |
| 3 | Mat(int rows, int cols, int type, Scalar s) − In addition to the parameters of the previous one, this constructor accepts an object of the class Scalar, which is used to initialize the elements. |
| 4 | Mat(Size size, int type) − This constructor accepts two parameters, an object representing the size of the matrix and an integer representing the type of the array used to store the data. |
| 5 | Mat(Size size, int type, Scalar s) − In addition to the parameters of the previous one, this constructor accepts an object of the class Scalar, which is used to initialize the elements. |
| 6 | Mat(long addr) |
| 7 | Mat(Mat m, Range rowRange) − This constructor accepts another matrix and an object of the class Range representing the range of rows to be taken to create a new matrix. |
| 8 | Mat(Mat m, Range rowRange, Range colRange) − In addition to the parameters of the previous one, this constructor accepts an object of the class Range representing the column range. |
| 9 | Mat(Mat m, Rect roi) − This constructor accepts two objects, one representing another matrix and the other representing the Region Of Interest. |

Note −

- Array type. Use CV_8UC1, …, CV_64FC4 to create 1-4 channel matrices, or CV_8UC(n), …, CV_64FC(n) to create multi-channel (up to CV_CN_MAX channels) matrices.

- The matrix types are represented by fields of the class CvType, which belongs to the package org.opencv.core.
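The naming scheme of these type constants (bit depth, then channel count) is reflected in their integer values. The following stand-alone sketch reproduces the packing used by the OpenCV 3.x line that this tutorial targets, as far as I know: the depth code sits in the lowest three bits and the channel count minus one is shifted above it. MatTypeSketch is a hypothetical class for illustration only; in real code, use the fields and methods of CvType rather than computing values by hand.

```java
/** Stand-alone sketch of how a depth and a channel count combine into one int type code. */
class MatTypeSketch {
    // Depth codes (CV_8U .. CV_64F) as defined by OpenCV.
    static final int CV_8U = 0, CV_8S = 1, CV_16U = 2, CV_16S = 3,
                     CV_32S = 4, CV_32F = 5, CV_64F = 6;

    // Assumption: the depth occupies the lowest three bits (true for OpenCV 3.x).
    static final int CN_SHIFT = 3;
    static final int DEPTH_MASK = (1 << CN_SHIFT) - 1;

    /** Combines a depth code and a channel count into a type code. */
    static int makeType(int depth, int channels) {
        return (depth & DEPTH_MASK) + ((channels - 1) << CN_SHIFT);
    }

    /** Extracts the depth code from a type code. */
    static int depth(int type) {
        return type & DEPTH_MASK;
    }

    /** Extracts the channel count from a type code. */
    static int channels(int type) {
        return (type >> CN_SHIFT) + 1;
    }

    public static void main(String[] args) {
        int cv8uc3 = makeType(CV_8U, 3);
        System.out.println("CV_8UC1  = " + makeType(CV_8U, 1));
        System.out.println("CV_8UC3  = " + cv8uc3);
        System.out.println("CV_64FC4 = " + makeType(CV_64F, 4));
        System.out.println("channels(CV_8UC3) = " + channels(cv8uc3));
    }
}
```

This is why a single int parameter is enough for the Mat constructors to know both the element size and the number of channels.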

Methods and Description

Following are some of the methods provided by the Mat class.

| S.No | Methods and Description |
|---|---|
| 1 | Mat col(int x) − This method accepts an integer parameter representing the index of a column and returns that column. |
| 2 | Mat row(int y) − This method accepts an integer parameter representing the index of a row and returns that row. |
| 3 | int cols() − This method returns the number of columns in the matrix. |
| 4 | int rows() − This method returns the number of rows in the matrix. |
| 5 | Mat setTo(Mat value) − This method accepts an object of the Mat type and sets the array elements to the specified value. |
| 6 | Mat setTo(Scalar s) − This method accepts an object of the Scalar type and sets the array elements to the specified value. |

Creating and Displaying the Matrix

In this section, we are going to discuss our first OpenCV example. We will see how to create and display a simple OpenCV matrix.

Given below are the steps to be followed to create and display a matrix in OpenCV.

Step 1: Load the OpenCV native library

When writing Java code with the OpenCV library, the first step is to load the OpenCV native library using the loadLibrary() method, as shown below.

//Loading the core library

System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

Step 2: Instantiate the Mat class

Instantiate the Mat class using any of the constructors mentioned earlier in this chapter.

//Creating a matrix

Mat matrix = new Mat(5, 5, CvType.CV_8UC1, new Scalar(0));

Step 3: Fill the matrix using the methods

You can retrieve particular rows/columns of a matrix by passing index values to the methods row()/col().

And, you can set values to these using any of the variants of the setTo() methods.

//Retrieving the row with index 0

Mat row0 = matrix.row(0);

//setting values of all elements in the row with index 0

row0.setTo(new Scalar(1));

//Retrieving the column with index 3

Mat col3 = matrix.col(3);

//setting values of all elements in the column with index 3

col3.setTo(new Scalar(3));

Example

You can use the following program code to create and display a simple matrix in Java using OpenCV library.

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.CvType;

import org.opencv.core.Scalar;

class DisplayingMatrix {

public static void main(String[] args) {

//Loading the core library

System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

//Creating a matrix

Mat matrix = new Mat(5, 5, CvType.CV_8UC1, new Scalar(0));

//Retrieving the row with index 0

Mat row0 = matrix.row(0);

//setting values of all elements in the row with index 0

row0.setTo(new Scalar(1));

//Retrieving the column with index 3

Mat col3 = matrix.col(3);

//setting values of all elements in the column with index 3

col3.setTo(new Scalar(3));

//Printing the matrix

System.out.println("OpenCV Mat data:\n" + matrix.dump());

}

}

On executing the above program, you will get the following output −

OpenCV Mat data:

[ 1, 1, 1, 3, 1;

0, 0, 0, 3, 0;

0, 0, 0, 3, 0;

0, 0, 0, 3, 0;

0, 0, 0, 3, 0]

Loading Image using JavaSE API

The BufferedImage class of the java.awt.image package is used to store an image, and the ImageIO class of the javax.imageio package provides methods to read and write images.

Example

You can use the following program code to load and save images using JavaSE library.

import java.awt.image.BufferedImage;

import java.io.File;

import java.io.IOException;

import javax.imageio.ImageIO;

public class LoadingImage_JSE_library {

public static void main( String[] args ) throws IOException {

//Input File

File input = new File("C:/EXAMPLES/OpenCV/sample.jpg");

//Reading the image

BufferedImage image = ImageIO.read(input);

//Saving the image to a different location

File output = new File("C:/OpenCV/sample.jpg");

ImageIO.write(image, "jpg", output);

System.out.println("image Saved");

}

}

On executing the above program, you will get the following output −

image Saved

If you open the specified path, you can observe the saved image as follows −

OpenCV – Reading Images

The Imgcodecs class of the package org.opencv.imgcodecs provides methods to read and write images. Using OpenCV, you can read an image and store it in a matrix (perform transformations on the matrix if needed). Later, you can write the processed matrix to a file.

The imread() method of the Imgcodecs class is used to read an image using OpenCV. Following is the syntax of this method.

imread(filename)

It accepts an argument (filename), a variable of the String type representing the path of the file that is to be read.

Given below are the steps to be followed to read images in Java using OpenCV library.

Step 1: Load the OpenCV native library

Load the OpenCV native library using the loadLibrary() method, as shown below.

//Loading the core library

System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

Step 2: Instantiate the Imgcodecs class

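Instantiate the Imgcodecs class. (imread() is a static method, so it can also be called directly as Imgcodecs.imread() without an instance.)

//Instantiating the Imgcodecs class

Imgcodecs imageCodecs = new Imgcodecs();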

Step 3: Reading the image

Read the image using the method imread(). This method accepts a string argument representing the path of the image and returns the image as a Mat object.

//Reading the Image from the file

Mat matrix = imageCodecs.imread(file); //file holds the path of the image

Example

The following program code shows how you can read an image using OpenCV library.

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.imgcodecs.Imgcodecs;

public class ReadingImages {

public static void main(String args[]) {

//Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

//Instantiating the Imagecodecs class

Imgcodecs imageCodecs = new Imgcodecs();

//Reading the Image from the file

String file ="C:/EXAMPLES/OpenCV/sample.jpg";

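Mat matrix = imageCodecs.imread(file);

//imread() returns an empty Mat when the file cannot be read

if (matrix.empty()) {

System.out.println("Could not read " + file);

return;

}

System.out.println("Image Loaded");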

}

}

On executing the above program, OpenCV loads the specified image and displays the following output −

Image Loaded

OpenCV – Writing an Image

The imwrite() method of the Imgcodecs class is used to write an image using OpenCV. To write an image, repeat the first three steps from the previous example.

To write an image, you need to invoke the imwrite() method of the Imgcodecs class.

Following is the syntax of this method.

imwrite(filename, mat)

This method accepts the following parameters −

- filename − A String variable representing the path where the file is to be saved.

- mat − A Mat object representing the image to be written.

Example

The following Java program shows how to write an image using the OpenCV library.

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.imgcodecs.Imgcodecs;

public class WritingImages {

public static void main(String args[]) {

//Loading the OpenCV core library

System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

//Instantiating the imagecodecs class

Imgcodecs imageCodecs = new Imgcodecs();

//Reading the Image from the file and storing it in to a Matrix object

String file ="C:/EXAMPLES/OpenCV/sample.jpg";

Mat matrix = imageCodecs.imread(file);

System.out.println("Image Loaded ..........");

String file2 = "C:/EXAMPLES/OpenCV/sample_resaved.jpg";

//Writing the image

imageCodecs.imwrite(file2, matrix);

System.out.println("Image Saved ............");

}

}

On executing the above program, you will get the following output −

Image Loaded ..........

Image Saved ............

If you open the specified path, you can observe the saved image as shown below −

OpenCV – GUI

In the earlier chapters, we discussed how to read and save an image using the OpenCV Java library. In addition, we can display the loaded images in a separate window using GUI libraries such as AWT/Swing and JavaFX.

Converting Mat to Buffered Image

To read an image, we use the imread() method, which returns the image as a Mat object. To use this image with GUI libraries (AWT/Swing and JavaFX), it must be converted into an object of the BufferedImage class of the java.awt.image package.

Following are the steps to convert a Mat object of OpenCV to BufferedImage object.

Step 1: Encode the Mat to MatOfByte

First of all, you need to encode the matrix into a matrix of bytes. You can do this using the imencode() method of the Imgcodecs class. Following is the syntax of this method.

imencode(ext, image, matOfByte);

This method accepts the following parameters −

- ext − A String parameter specifying the image format (.jpg, .png, etc.)

- image − A Mat object of the image

- matOfByte − An empty object of the class MatOfByte

Encode the image using this method as shown below.

//Reading the image

Mat image = Imgcodecs.imread(file);

//instantiating an empty MatOfByte class

MatOfByte matOfByte = new MatOfByte();

//Converting the Mat object to MatOfByte

Imgcodecs.imencode(".jpg", image, matOfByte);

Step 2: Convert the MatOfByte object to byte array

Convert the MatOfByte object into a byte array using the method toArray().

byte[] byteArray = matOfByte.toArray();

Step 3: Preparing the InputStream object

Prepare the InputStream object by passing the byte array created in the previous step to the constructor of the ByteArrayInputStream class.

//Preparing the InputStream object

InputStream in = new ByteArrayInputStream(byteArray);

Step 4: Preparing the BufferedImage object

Pass the InputStream object created in the previous step to the read() method of the ImageIO class. This will return a BufferedImage object.

//Preparing the BufferedImage

BufferedImage bufImage = ImageIO.read(in);
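Steps 2 to 4 can be tried without OpenCV, since they only need an array of encoded image bytes. The sketch below is an illustration under that assumption: encodePng() is a hypothetical stand-in for imencode() followed by toArray(), and decode() performs the ByteArrayInputStream and ImageIO.read() steps. It also checks for the null that ImageIO.read() returns when no registered reader can decode the data, which the snippets above leave out.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

/** Hypothetical helper class; not part of OpenCV. */
class EncodedBytesToImage {

    /** Steps 3 and 4: wrap the encoded bytes in a stream and let ImageIO decode them. */
    static BufferedImage decode(byte[] encoded) {
        try (InputStream in = new ByteArrayInputStream(encoded)) {
            BufferedImage image = ImageIO.read(in);
            if (image == null) {
                // ImageIO.read() signals "no suitable reader" with null, not an exception.
                throw new IllegalArgumentException("Data is not a decodable image");
            }
            return image;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Stand-in for imencode() + toArray(): produces PNG bytes using only the JDK. */
    static byte[] encodePng(BufferedImage image) {
        ImageIO.setUseCache(false); // keep everything in memory, no temporary files
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try {
            if (!ImageIO.write(image, "png", out)) {
                throw new IllegalStateException("No PNG writer available");
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) {
        BufferedImage source = new BufferedImage(4, 3, BufferedImage.TYPE_INT_RGB);
        source.setRGB(1, 2, 0xFF8000); // one orange pixel
        BufferedImage decoded = decode(encodePng(source));
        System.out.println(decoded.getWidth() + "x" + decoded.getHeight());
    }
}
```

With OpenCV available, the byte array returned by matOfByte.toArray() would be passed to decode() in place of the bytes from encodePng().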

Displaying Image using AWT/Swing

To display an image using an AWT/Swing frame, first read the image using the imread() method and convert it into a BufferedImage by following the above-mentioned steps.

Then, instantiate the JFrame class and add the buffered image to the ContentPane of the JFrame, as shown below −

//Instantiate JFrame

JFrame frame = new JFrame();

//Set Content to the JFrame

frame.getContentPane().add(new JLabel(new ImageIcon(bufImage)));

frame.pack();

frame.setVisible(true);

Example

The following program shows how to read an image using the OpenCV library and display it in a Swing window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javax.swing.ImageIcon;

import javax.swing.JFrame;

import javax.swing.JLabel;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.imgcodecs.Imgcodecs;

public class DisplayingImagesUsingSwings {

public static void main(String args[]) throws Exception {

//Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

//Reading the Image from the file and storing it in to a Matrix object

String file = "C:/EXAMPLES/OpenCV/sample.jpg";

Mat image = Imgcodecs.imread(file);

//Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", image, matOfByte);

//Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

//Preparing the Buffered Image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

//Instantiate JFrame

JFrame frame = new JFrame();

//Set Content to the JFrame

frame.getContentPane().add(new JLabel(new ImageIcon(bufImage)));

frame.pack();

frame.setVisible(true);

System.out.println("Image Loaded");

}

}

On executing the above program, you will get the following output −

Image Loaded

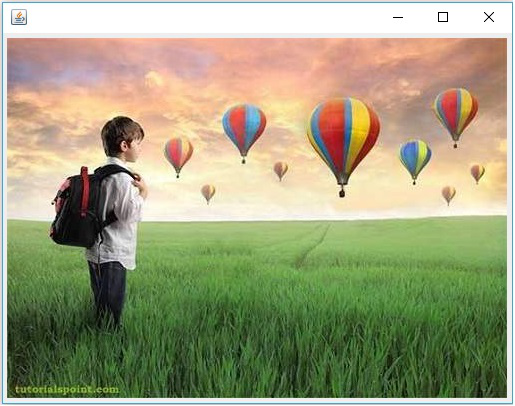

In addition to that, you can see a window displaying the image loaded, as follows −

Displaying Image using JavaFX

To display an image using JavaFX, first of all, read an image using the imread() method and convert it into BufferedImage. Then, convert the BufferedImage to WritableImage, as shown below.

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

Pass this WritableImage object to the constructor of the ImageView class.

ImageView imageView = new ImageView(writableImage);

Example

The following program code shows how to read an image and display it through JavaFX window using OpenCV library.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.IOException;

import java.io.InputStream;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import javax.imageio.ImageIO;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.imgcodecs.Imgcodecs;

public class DisplayingImagesJavaFX extends Application {

@Override

public void start(Stage stage) throws IOException {

WritableImage writableImage = loadImage();

//Setting the image view

ImageView imageView = new ImageView(writableImage);

//Setting the position of the image

imageView.setX(50);

imageView.setY(25);

//setting the fit height and width of the image view

imageView.setFitHeight(400);

imageView.setFitWidth(500);

//Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

//Creating a Group object

Group root = new Group(imageView);

//Creating a scene object

Scene scene = new Scene(root, 600, 400);

//Setting title to the Stage

stage.setTitle("Loading an image");

//Adding scene to the stage

stage.setScene(scene);

//Displaying the contents of the stage

stage.show();

}

public WritableImage loadImage() throws IOException {

//Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

//Reading the Image from the file and storing it in to a Matrix object

String file ="C:/EXAMPLES/OpenCV/sample.jpg";

Mat image = Imgcodecs.imread(file);

//Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", image, matOfByte);

//Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

//Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

System.out.println("Image Loaded");

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

Image Loaded

In addition to that, you can see a window displaying the image loaded, as follows −

OpenCV – The IMREAD_XXX Flag

OpenCV supports various types of images such as colored, binary, grayscale, etc. Using the imread() method and predefined fields of the Imgcodecs class, you can read a given image as another type.

The flags parameter of imread() method (IMREAD_XXX)

In the earlier chapters, we have seen the syntax of imread() method of the Imgcodecs class. It accepts a string argument representing the location of the image that is to be read.

imread(filename)

The imread() method has another syntax.

imread(filename, int flags)

This syntax accepts two parameters −

- filename − A String representing the path of the file that is to be read.

- flags − An integer value representing a predefined flag. Each value makes the method read the given image as a specific type (grayscale, color, etc.).

The following table lists the fields provided in the Imgcodecs class as values for this parameter.

| S.No | Fields and Description |
|---|---|
| 1 | IMREAD_COLOR − If the flag is set to this value, the loaded image will be converted to a 3-channel BGR (Blue Green Red) color image. |
| 2 | IMREAD_GRAYSCALE − If the flag is set to this value, the loaded image will be converted to a single-channel grayscale image. |
| 3 | IMREAD_LOAD_GDAL − If the flag is set to this value, you can load the image using the gdal driver. |
| 4 | IMREAD_ANYCOLOR − If the flag is set to this value, the image is read in any possible color format. |
| 5 | IMREAD_REDUCED_COLOR_2, IMREAD_REDUCED_COLOR_4, IMREAD_REDUCED_COLOR_8 − If the flag is set to one of these values, the image is read as a three-channel BGR image, and its size is reduced to 1/2, 1/4, or 1/8 of the original, depending on the field used. |
| 6 | IMREAD_REDUCED_GRAYSCALE_2, IMREAD_REDUCED_GRAYSCALE_4, IMREAD_REDUCED_GRAYSCALE_8 − If the flag is set to one of these values, the image is read as a single-channel grayscale image, and its size is reduced to 1/2, 1/4, or 1/8 of the original, depending on the field used. |
| 7 | IMREAD_UNCHANGED − If the flag is set to this value, the loaded image is returned as it is. |
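The practical difference between a single-channel and a three-channel result shows up in how much pixel data there is and how it is laid out. The sketch below is plain Java with hypothetical names; it assumes 8-bit data packed row by row with the channels of each pixel stored next to each other, which is the layout the byte arrays in the following chapters rely on.

```java
/** Hypothetical helper illustrating packed, row-major, interleaved 8-bit pixel data. */
class PixelLayoutSketch {

    /** Bytes needed for an 8-bit image (channels = 1 for grayscale, 3 for BGR). */
    static int bufferSize(int rows, int cols, int channels) {
        return rows * cols * channels;
    }

    /** Position of one channel value of the pixel at (row, col) in the packed array. */
    static int index(int row, int col, int channel, int cols, int channels) {
        return (row * cols + col) * channels + channel;
    }

    public static void main(String[] args) {
        int rows = 480, cols = 640;
        System.out.println("Grayscale bytes: " + bufferSize(rows, cols, 1));
        System.out.println("BGR bytes:       " + bufferSize(rows, cols, 3));
        // In a BGR image, channel 0 is blue, 1 is green, and 2 is red.
        System.out.println("Red value of pixel (0, 1) is at index " + index(0, 1, 2, cols, 3));
    }
}
```

The expression rows * cols * elemSize used in the following programs computes the same buffer size, with elemSize standing for the number of bytes per pixel.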

OpenCV – Reading an Image as Grayscale

The following program demonstrates how to read a colored image as grayscale and display it in a JavaFX window. Here, we read the image by passing the flag IMREAD_GRAYSCALE to the imread() method along with the String holding the path of a colored image.

import java.awt.image.BufferedImage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.imgcodecs.Imgcodecs;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

public class ReadingAsGrayscale extends Application {

@Override

public void start(Stage stage) throws Exception {

WritableImage writableImage = loadAndConvert();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// Setting the position of the image

imageView.setX(10);

imageView.setY(10);

// setting the fit height and width of the image view

imageView.setFitHeight(400);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Reading image as grayscale");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage loadAndConvert() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Instantiating the imagecodecs class

Imgcodecs imageCodecs = new Imgcodecs();

String input = "C:/EXAMPLES/OpenCV/sample.jpg";

// Reading the image

Mat src = imageCodecs.imread(input, Imgcodecs.IMREAD_GRAYSCALE);

byte[] data1 = new byte[src.rows() * src.cols() * (int)(src.elemSize())];

src.get(0, 0, data1);

// Creating the buffered image

BufferedImage bufImage = new BufferedImage(src.cols(),src.rows(),

BufferedImage.TYPE_BYTE_GRAY);

// Setting the data elements to the image

bufImage.getRaster().setDataElements(0, 0, src.cols(), src.rows(), data1);

// Creating a WritableImage

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

System.out.println("Image Read");

return writableImage;

}

public static void main(String args[]) throws Exception {

launch(args);

}

}
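The raster-copy step inside loadAndConvert() can be examined on its own. The following sketch uses only the JDK and a hand-made byte array in place of the data obtained from src.get(0, 0, data1); GrayBytesToImage is a hypothetical name. It assumes one byte per pixel, stored row by row.

```java
import java.awt.image.BufferedImage;

/** Hypothetical helper; mirrors the grayscale raster copy used in the program above. */
class GrayBytesToImage {

    /** Copies packed 8-bit grayscale bytes (row-major, one byte per pixel) into an image. */
    static BufferedImage fromGrayBytes(byte[] pixels, int width, int height) {
        if (pixels.length != width * height) {
            throw new IllegalArgumentException("Expected " + (width * height) + " bytes");
        }
        BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_BYTE_GRAY);
        image.getRaster().setDataElements(0, 0, width, height, pixels);
        return image;
    }

    public static void main(String[] args) {
        // A 2 x 2 image: top row 0 and 64, bottom row 128 and 255.
        byte[] pixels = {0, 64, (byte) 128, (byte) 255};
        BufferedImage image = fromGrayBytes(pixels, 2, 2);
        // Java bytes are signed, but the raster reports samples in the range 0..255.
        System.out.println(image.getRaster().getSample(0, 1, 0));
        System.out.println(image.getRaster().getSample(1, 1, 0));
    }
}
```

Copying the raw bytes this way avoids the encode and decode round trip through imencode() and ImageIO.read() shown in the GUI chapter.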

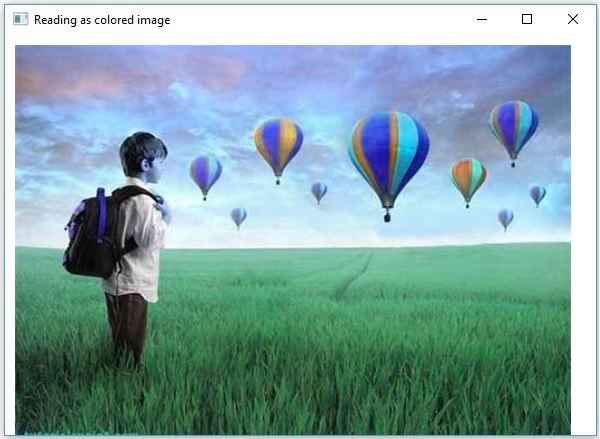

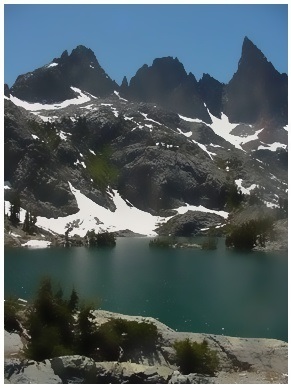

Input Image

Assume that following is the input image sample.jpg specified in the above program.

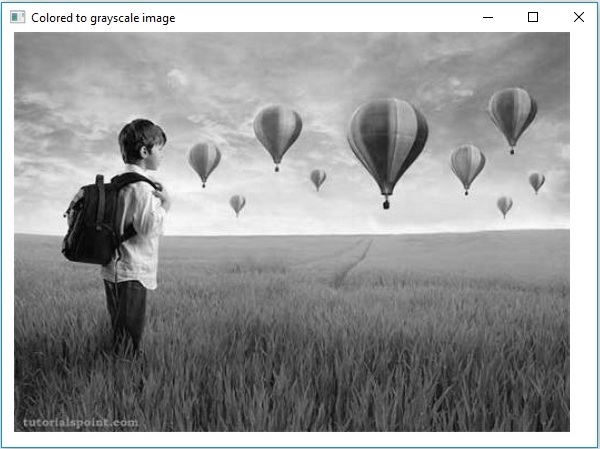

Output Image

On executing the program, you will get the following output.

OpenCV – Reading Image as BGR

The following program demonstrates how to read a colored image as a BGR image and display it in a JavaFX window. Here, we read the image by passing the flag IMREAD_COLOR to the imread() method along with the String holding the path of a colored image.

import java.awt.image.BufferedImage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.imgcodecs.Imgcodecs;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

public class ReadingAsColored extends Application {

@Override

public void start(Stage stage) throws Exception {

WritableImage writableImage = loadAndConvert();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// Setting the position of the image

imageView.setX(10);

imageView.setY(10);

// setting the fit height and width of the image view

imageView.setFitHeight(400);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Reading as colored image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage loadAndConvert() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

String input = "C:/EXAMPLES/OpenCV/sample.jpg";

// Reading the image

Mat src = Imgcodecs.imread(input, Imgcodecs.IMREAD_COLOR);

byte[] data1 = new byte[src.rows() * src.cols() * (int)(src.elemSize())];

src.get(0, 0, data1);

// Creating the buffered image

BufferedImage bufImage = new BufferedImage(src.cols(),src.rows(),

BufferedImage.TYPE_3BYTE_BGR);

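// Copying the BGR bytes into the image's backing buffer, which also stores each pixel as B, G, R

// (setDataElements() reads the array in band order R, G, B, which would swap red and blue here)

byte[] target = ((java.awt.image.DataBufferByte) bufImage.getRaster().getDataBuffer()).getData();

System.arraycopy(data1, 0, target, 0, data1.length);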

// Creating a WritableImage

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

System.out.println("Image read");

return writableImage;

}

public static void main(String args[]) throws Exception {

launch(args);

}

}

Input Image

Assume that the following is the input image sample.jpg specified in the above program.

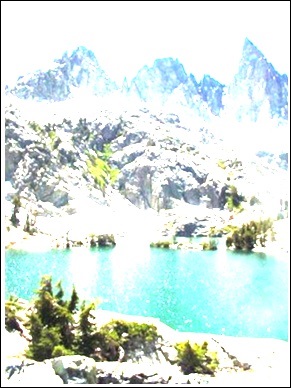

Output Image

On executing the program, you will get the following output.

OpenCV – Colored Images to GrayScale

In the earlier chapters, we discussed how to read an input image as different types (binary, grayscale, BGR, etc.). In this chapter, we will learn how to convert one type of image to another.

The class named Imgproc of the package org.opencv.imgproc provides methods to convert an image from one color space to another.

Converting Colored Images to Grayscale

A method named cvtColor() is used to convert colored images to grayscale. Following is the syntax of this method.

cvtColor(Mat src, Mat dst, int code)

This method accepts the following parameters −

-

src − A matrix representing the source.

-

dst − A matrix representing the destination.

-

code − An integer code representing the type of the conversion, for example, RGB to Grayscale.

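You can convert colored images to grayscale by passing a conversion code such as Imgproc.COLOR_BGR2GRAY (or Imgproc.COLOR_RGB2GRAY for RGB-ordered data), along with the source and destination matrices, to the cvtColor() method. Because imread() loads colored images in BGR channel order, COLOR_BGR2GRAY is the code that matches an image read from a file.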
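To make the role of the conversion code more concrete, here is a minimal pure-Java sketch of a BGR-to-grayscale conversion. It assumes the weighting Y = 0.299 R + 0.587 G + 0.114 B that OpenCV documents for its RGB/BGR-to-gray conversions; the class name BgrToGraySketch and the method bgrToGray() are hypothetical, and the library's own implementation may round slightly differently.

```java
// Illustrative sketch (hypothetical class name); does not call OpenCV.
final class BgrToGraySketch {

    // Converts interleaved 8-bit BGR pixel data to 8-bit grayscale using
    // Y = 0.299 R + 0.587 G + 0.114 B.
    static byte[] bgrToGray(byte[] bgr) {
        byte[] gray = new byte[bgr.length / 3];
        for (int i = 0; i < gray.length; i++) {
            int b = bgr[3 * i] & 0xFF;       // channel 0 is blue in BGR order
            int g = bgr[3 * i + 1] & 0xFF;   // channel 1 is green
            int r = bgr[3 * i + 2] & 0xFF;   // channel 2 is red
            gray[i] = (byte) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
        }
        return gray;
    }

    public static void main(String[] args) {
        // Two pixels in BGR order: pure blue, then pure red.
        byte[] pixels = { (byte) 255, 0, 0, 0, 0, (byte) 255 };
        byte[] gray = bgrToGray(pixels);
        System.out.println((gray[0] & 0xFF) + " " + (gray[1] & 0xFF));
        // prints "29 76"
    }
}
```

Blue contributes far less to the gray level than red, so treating BGR data as RGB (or the reverse) swaps those two weights and changes the result.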

Example

The following program demonstrates how to convert a colored image to a grayscale image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

public class ColorToGrayscale extends Application {

@Override

public void start(Stage stage) throws Exception {

WritableImage writableImage = loadAndConvert();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// Setting the position of the image

imageView.setX(10);

imageView.setY(10);

// setting the fit height and width of the image view

imageView.setFitHeight(400);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Colored to grayscale image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage loadAndConvert() throws Exception {

//Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

String input = "C:/EXAMPLES/OpenCV/sample.jpg";

//Reading the image

Mat src = Imgcodecs.imread(input);

//Creating the empty destination matrix

Mat dst = new Mat();

//Converting the image to grayscale and saving it in the dst matrix

//imread() returns the pixels in BGR order, so COLOR_BGR2GRAY is the matching code

Imgproc.cvtColor(src, dst, Imgproc.COLOR_BGR2GRAY);

//Extracting data from the transformed image (dst)

byte[] data1 = new byte[dst.rows() * dst.cols() * (int)(dst.elemSize())];

dst.get(0, 0, data1);

//Creating Buffered image using the data

BufferedImage bufImage = new BufferedImage(dst.cols(),dst.rows(),

BufferedImage.TYPE_BYTE_GRAY);

//Setting the data elements to the image

bufImage.getRaster().setDataElements(0, 0, dst.cols(), dst.rows(), data1);

//Creating a WritableImage

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

System.out.println("Converted to Grayscale");

return writableImage;

}

public static void main(String args[]) throws Exception {

launch(args);

}

}

Input Image

Assume that the following is the input image sample.jpg specified in the above program.

Output Image

On executing the program, you will get the following output.

OpenCV – Colored Image to Binary

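The threshold() method of the Imgproc class is used to convert a grayscale image to a binary image. To convert a colored image, first convert it to grayscale and then apply the threshold. Following is the syntax of this method.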

threshold(Mat src, Mat dst, double thresh, double maxval, int type)

This method accepts the following parameters −

-

src − A Mat object representing the input image.

-

dst − A Mat object representing the output image.

-

thresh − A double representing the threshold value.

-

maxval − A double representing the maximum value to use with the THRESH_BINARY and THRESH_BINARY_INV thresholding types.

-

type − An integer code representing the thresholding type, for example, THRESH_BINARY.

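You can convert a grayscale image to a binary image by passing Imgproc.THRESH_BINARY as the type, along with values for the remaining parameters. With this type, every pixel whose value is greater than thresh is set to maxval, and every other pixel is set to 0.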
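The rule applied by THRESH_BINARY can be sketched in plain Java as follows. This is only an illustration of the per-pixel rule on 8-bit data, not the library's implementation; the class name ThresholdSketch and the method thresholdBinary() are hypothetical, and clamping maxval to 255 reflects the assumption that an 8-bit image cannot hold a larger value.

```java
// Illustrative sketch (hypothetical class name); does not call OpenCV.
final class ThresholdSketch {

    // Applies the THRESH_BINARY rule to 8-bit grayscale data:
    // dst = maxval if src > thresh, otherwise 0.
    static byte[] thresholdBinary(byte[] src, int thresh, int maxval) {
        // An 8-bit pixel cannot exceed 255, so larger values are clamped.
        int max = Math.min(Math.max(maxval, 0), 255);
        byte[] dst = new byte[src.length];
        for (int i = 0; i < src.length; i++) {
            int value = src[i] & 0xFF; // read the byte as an unsigned value
            dst[i] = (byte) (value > thresh ? max : 0);
        }
        return dst;
    }

    public static void main(String[] args) {
        byte[] src = { 0, (byte) 200, (byte) 201, (byte) 255 };
        byte[] dst = thresholdBinary(src, 200, 500);
        StringBuilder out = new StringBuilder();
        for (byte b : dst) {
            out.append(b & 0xFF).append(' ');
        }
        System.out.println(out.toString().trim());
        // prints "0 0 255 255"
    }
}
```

A pixel equal to the threshold (200 here) stays black, because the comparison is strictly greater than.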

Example

The following program demonstrates how to convert a colored image to a binary image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

public class ColorToBinary extends Application {

@Override

public void start(Stage stage) throws Exception {

WritableImage writableImage = loadAndConvert();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// Setting the position of the image

imageView.setX(10);

imageView.setY(10);

// setting the fit height and width of the image view

imageView.setFitHeight(400);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Loading an image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage loadAndConvert() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

String input = "C:/EXAMPLES/OpenCV/sample.jpg";

// Reading the image (imread() is static and returns the pixels in BGR order)

Mat src = Imgcodecs.imread(input);

// Converting to a single-channel grayscale image so that the result fits TYPE_BYTE_GRAY

Mat gray = new Mat();

Imgproc.cvtColor(src, gray, Imgproc.COLOR_BGR2GRAY);

// Creating the destination matrix

Mat dst = new Mat();

// Converting to binary image: pixels above 200 become 255 (the 8-bit maximum), the rest 0

Imgproc.threshold(gray, dst, 200, 255, Imgproc.THRESH_BINARY);

// Extracting data from the transformed image (dst)

byte[] data1 = new byte[dst.rows() * dst.cols() * (int)(dst.elemSize())];

dst.get(0, 0, data1);

// Creating Buffered image using the data

BufferedImage bufImage = new BufferedImage(dst.cols(),dst.rows(),

BufferedImage.TYPE_BYTE_GRAY);

// Setting the data elements to the image

bufImage.getRaster().setDataElements(0, 0, dst.cols(), dst.rows(), data1);

// Creating a Writable image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

System.out.println("Converted to binary");

return writableImage;

}

public static void main(String args[]) throws Exception {

launch(args);

}

}

Input Image

Assume that the following is the input image sample.jpg specified in the above program.

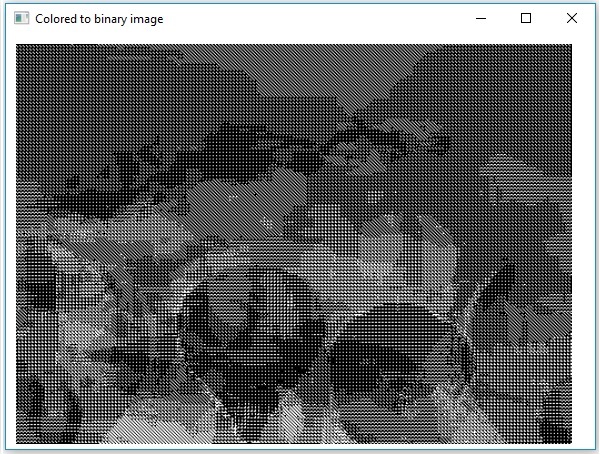

Output Image

On executing the program, you will get the following output.

OpenCV – Grayscale to Binary

You can use the same method mentioned in the previous chapter to convert a grayscale image to a binary image. Just pass the path for a grayscale image as input to this program.

Example

The following program demonstrates how to convert a grayscale image to a binary image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

public class GrayScaleToBinary extends Application {

@Override

public void start(Stage stage) throws Exception {

WritableImage writableImage = loadAndConvert();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// Setting the position of the image

imageView.setX(10);

imageView.setY(10);

// Setting the fit height and width of the image view

imageView.setFitHeight(400);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Grayscale to binary image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage loadAndConvert() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

String input = "E:/OpenCV/chap7/grayscale.jpg";

// Reading the image as a single-channel grayscale image (imread() is static)

Mat src = Imgcodecs.imread(input, Imgcodecs.IMREAD_GRAYSCALE);

// Creating the destination matrix

Mat dst = new Mat();

// Converting to binary image: pixels above 200 become 255 (the 8-bit maximum), the rest 0

Imgproc.threshold(src, dst, 200, 255, Imgproc.THRESH_BINARY);

// Extracting data from the transformed image (dst)

byte[] data1 = new byte[dst.rows() * dst.cols() * (int)(dst.elemSize())];

dst.get(0, 0, data1);

// Creating Buffered image using the data (one byte per pixel, so TYPE_BYTE_GRAY)

BufferedImage bufImage = new BufferedImage(dst.cols(),dst.rows(),

BufferedImage.TYPE_BYTE_GRAY);

// Setting the data elements to the image

bufImage.getRaster().setDataElements(0, 0, dst.cols(), dst.rows(), data1);

// Creating a Writable image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

System.out.println("Converted to binary");

return writableImage;

}

public static void main(String args[]) throws Exception {

launch(args);

}

}

Input Image

Assume that the following is the input image grayscale.jpg specified in the above program.

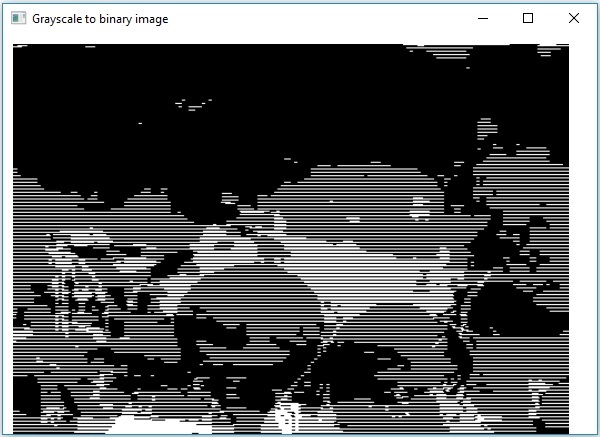

Output Image

On executing the program, you will get the following output.

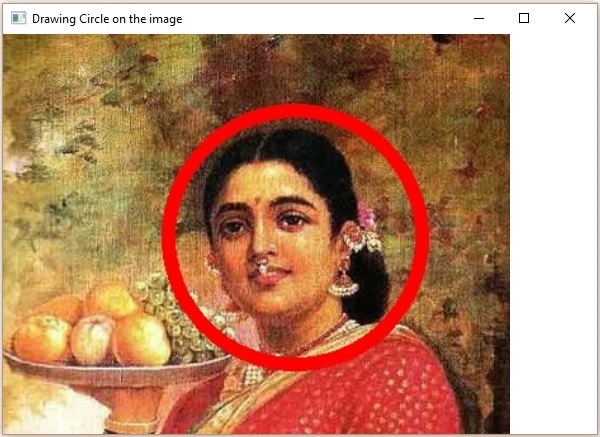

OpenCV – Drawing a Circle

You can draw various shapes such as circles, lines, rectangles, ellipses, polylines, convex polylines, and arrowed lines on an image using the respective methods of the Imgproc class in the org.opencv.imgproc package.

You can draw a circle on an image using the circle() method of the Imgproc class. Following is the syntax of this method −

circle(img, center, radius, color, thickness)

This method accepts the following parameters −

-

img − A Mat object representing the image on which the circle is to be drawn.

-

center − A Point object representing the center of the circle.

-

radius − An integer representing the radius of the circle.

-

color − A Scalar object representing the color of the circle, in BGR order.

-

thickness − An integer representing the thickness of the circle outline; by default, the value of thickness is 1.

Example

The following program demonstrates how to draw a circle on an image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class DrawingCircle extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and drawing the circle on it

DrawingCircle obj = new DrawingCircle();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Drawing Circle on the image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="E:/OpenCV/chap8/input.jpg";

Mat matrix = Imgcodecs.imread(file);

//Drawing a Circle

Imgproc.circle (

matrix, //Matrix obj of the image

new Point(230, 160), //Center of the circle

100, //Radius

new Scalar(0, 0, 255), //Scalar object for color

10 //Thickness of the circle

);

// Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", matrix, matOfByte);

// Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

// Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

this.matrix = matrix;

// Creating the Writable Image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

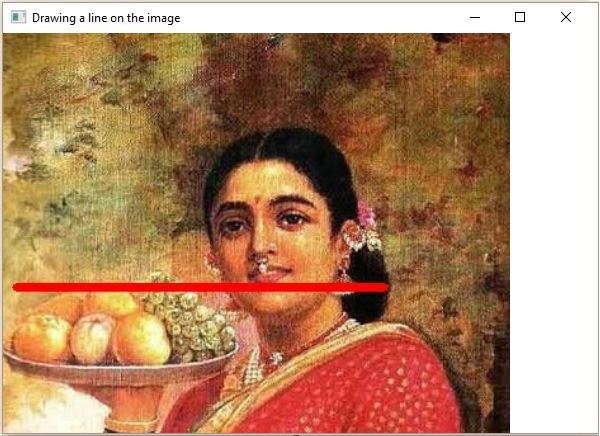

OpenCV – Drawing a Line

You can draw a line on an image using the line() method of the Imgproc class. Following is the syntax of this method.

line(img, pt1, pt2, color, thickness)

This method accepts the following parameters −

-

img − A Mat object representing the image on which the line is to be drawn.

-

pt1 and pt2 − Two Point objects representing the points between which the line is to be drawn.

-

color − A Scalar object representing the color of the line, in BGR order.

-

thickness − An integer representing the thickness of the line; by default, the value of thickness is 1.

Example

The following program demonstrates how to draw a line on an image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class DrawingLine extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and drawing the line on it

DrawingLine obj = new DrawingLine();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Drawing a line on the image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="E:/OpenCV/chap8/input.jpg";

Mat matrix = Imgcodecs.imread(file);

// Drawing a line

Imgproc.line (

matrix, //Matrix obj of the image

new Point(10, 200), //p1

new Point(300, 200), //p2

new Scalar(0, 0, 255), //Scalar object for color

5 //Thickness of the line

);

// Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", matrix, matOfByte);

// Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

// Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

this.matrix = matrix;

// Creating the Writable Image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

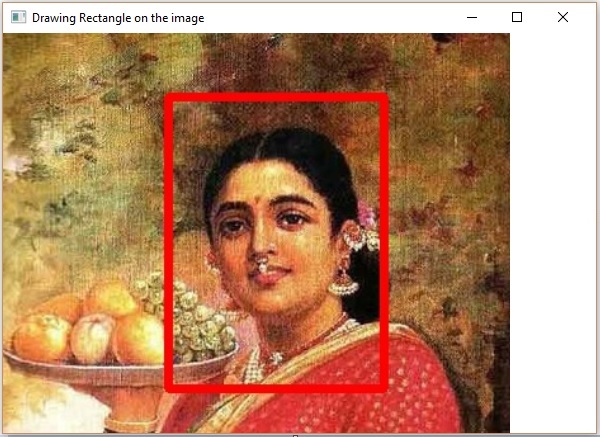

OpenCV – Drawing a Rectangle

You can draw a rectangle on an image using the rectangle() method of the Imgproc class. Following is the syntax of this method −

rectangle(img, pt1, pt2, color, thickness)

This method accepts the following parameters −

-

img − A Mat object representing the image on which the rectangle is to be drawn.

-

pt1 and pt2 − Two Point objects representing two opposite corners of the rectangle that is to be drawn.

-

color − A Scalar object representing the color of the rectangle, in BGR order.

-

thickness − An integer representing the thickness of the rectangle outline; by default, the value of thickness is 1.

Example

The following program demonstrates how to draw a rectangle on an image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class DrawingRectangle extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and drawing the rectangle on it

DrawingRectangle obj = new DrawingRectangle();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Drawing Rectangle on the image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="E:/OpenCV/chap8/input.jpg";

Mat matrix = Imgcodecs.imread(file);

// Drawing a Rectangle

Imgproc.rectangle (

matrix, //Matrix obj of the image

new Point(130, 50), //p1

new Point(300, 280), //p2

new Scalar(0, 0, 255), //Scalar object for color

5 //Thickness of the line

);

// Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", matrix, matOfByte);

// Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

// Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

this.matrix = matrix;

// Creating the Writable Image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

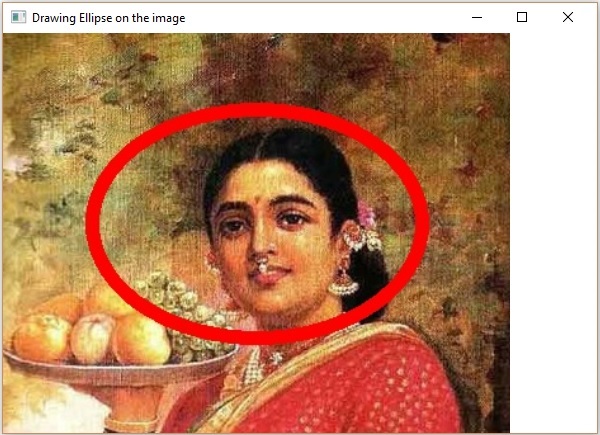

OpenCV – Drawing an Ellipse

You can draw an ellipse on an image using the ellipse() method of the Imgproc class. Following is the syntax of this method −

ellipse(img, box, color, thickness)

This method accepts the following parameters −

-

img − A Mat object representing the image on which the ellipse is to be drawn.

-

box − A RotatedRect object; the ellipse is drawn inscribed in this rectangle.

-

color − A Scalar object representing the color of the ellipse, in BGR order.

-

thickness − An integer representing the thickness of the ellipse outline; by default, the value of thickness is 1.

The constructor of the RotatedRect class accepts a Point object (the center of the rectangle), a Size object (its width and height), and a double (the rotation angle in degrees), as shown below.

RotatedRect(Point c, Size s, double a)
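The geometry behind these three values can be sketched without OpenCV. The example below computes a point on the ellipse inscribed in a rotated rectangle from the center, size, and angle; the class name RotatedEllipseSketch, the method pointOnEllipse(), and the parameter t (the position along the ellipse, in degrees) are hypothetical names introduced for illustration, and the formula is the standard parametric form of a rotated ellipse rather than the library's drawing code.

```java
// Illustrative sketch (hypothetical class name); does not call OpenCV.
final class RotatedEllipseSketch {

    // Returns {x, y} for the point at parameter tDegrees on the ellipse
    // inscribed in a rectangle with the given center, size and rotation.
    static double[] pointOnEllipse(double cx, double cy,
                                   double width, double height,
                                   double angleDegrees, double tDegrees) {
        double a = width / 2.0;   // semi-axis along the rectangle's width
        double b = height / 2.0;  // semi-axis along the rectangle's height
        double theta = Math.toRadians(angleDegrees);
        double t = Math.toRadians(tDegrees);
        double x = cx + a * Math.cos(t) * Math.cos(theta)
                      - b * Math.sin(t) * Math.sin(theta);
        double y = cy + a * Math.cos(t) * Math.sin(theta)
                      + b * Math.sin(t) * Math.cos(theta);
        return new double[] { x, y };
    }

    public static void main(String[] args) {
        // Center (200, 150), size 260 x 180, rotated by 180 degrees.
        double[] p = pointOnEllipse(200, 150, 260, 180, 180, 0);
        System.out.println(Math.round(p[0]) + " " + Math.round(p[1]));
        // prints "70 150"
    }
}
```

With a rotation of 180 degrees the end of the long axis simply moves from (330, 150) to (70, 150), so the drawn ellipse looks the same as with an angle of 0.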

Example

The following program demonstrates how to draw an ellipse on an image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.Point;

import org.opencv.core.RotatedRect;

import org.opencv.core.Scalar;

import org.opencv.core.Size;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class DrawingEllipse extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and drawing the ellipse on it

DrawingEllipse obj = new DrawingEllipse();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Drawing Ellipse on the image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="E:/OpenCV/chap8/input.jpg";

Mat matrix = Imgcodecs.imread(file);

// Drawing an Ellipse

Imgproc.ellipse (

matrix, //Matrix obj of the image

new RotatedRect ( // RotatedRect(Point c, Size s, double a)

new Point(200, 150),

new Size(260, 180), 180

),

new Scalar(0, 0, 255), //Scalar object for color

10 //Thickness of the line

);

// Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", matrix, matOfByte);

// Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

// Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

this.matrix = matrix;

// Creating the Writable Image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

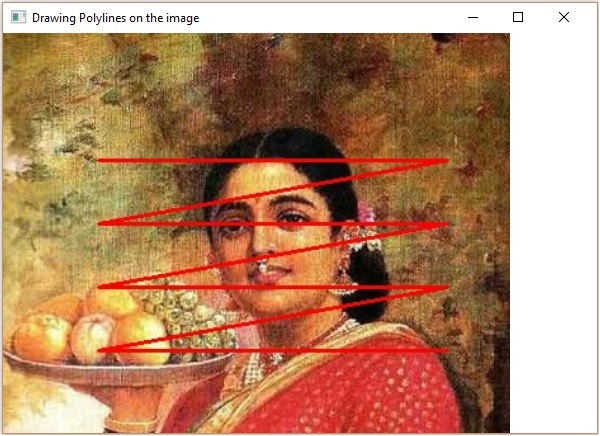

OpenCV – Drawing Polylines

You can draw polylines on an image using the polylines() method of the Imgproc class. Following is the syntax of this method.

polylines(img, pts, isClosed, color, thickness)

This method accepts the following parameters −

-

img − A Mat object representing the image on which the polylines are to be drawn.

-

pts − A List object holding objects of the type MatOfPoint; each MatOfPoint describes one polyline.

-

isClosed − A boolean specifying whether the polylines are closed, that is, whether the last point of each polyline is joined to its first point.

-

color − A Scalar object representing the color of the polylines, in BGR order.

-

thickness − An integer representing the thickness of the polylines; by default, the value of thickness is 1.

The constructor of the MatOfPoint class accepts objects of the class Point.

MatOfPoint(Point... a)
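To show how the order of the points and the isClosed flag determine what is drawn, the following pure-Java sketch lists the line segments that a polyline through a sequence of points consists of. The class name PolylineSegmentsSketch and the method segments() are hypothetical, and points are represented as plain {x, y} arrays rather than OpenCV Point objects.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch (hypothetical class name); does not call OpenCV.
final class PolylineSegmentsSketch {

    // Returns the segments of a polyline as {x1, y1, x2, y2} arrays.
    // Consecutive points are joined in order; a closed polyline also
    // joins the last point back to the first.
    static List<int[]> segments(int[][] pts, boolean isClosed) {
        List<int[]> result = new ArrayList<>();
        for (int i = 0; i + 1 < pts.length; i++) {
            result.add(new int[] {
                pts[i][0], pts[i][1], pts[i + 1][0], pts[i + 1][1] });
        }
        if (isClosed && pts.length > 2) {
            int last = pts.length - 1;
            result.add(new int[] {
                pts[last][0], pts[last][1], pts[0][0], pts[0][1] });
        }
        return result;
    }

    public static void main(String[] args) {
        int[][] pts = {
            { 75, 100 }, { 350, 100 }, { 75, 150 }, { 350, 150 },
            { 75, 200 }, { 350, 200 }, { 75, 250 }, { 350, 250 } };
        System.out.println(segments(pts, false).size() + " "
                + segments(pts, true).size());
        // prints "7 8"
    }
}
```

Eight points give seven segments when the polyline is open and eight when it is closed, which is why listing the points left, right, left, right produces a zigzag rather than a set of parallel lines.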

Example

The following program demonstrates how to draw polylines on an image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import java.util.ArrayList;

import java.util.List;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.MatOfPoint;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class DrawingPolyLines extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and drawing the polylines on it

DrawingPolyLines obj = new DrawingPolyLines();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Drawing Polylines on the image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="E:/OpenCV/chap8/input.jpg";

Mat matrix = Imgcodecs.imread(file);

List<MatOfPoint> list = new ArrayList<>();

list.add(

new MatOfPoint (

new Point(75, 100), new Point(350, 100),

new Point(75, 150), new Point(350, 150),

new Point(75, 200), new Point(350, 200),

new Point(75, 250), new Point(350, 250)

)

);

// Drawing polylines

Imgproc.polylines (

matrix, // Matrix obj of the image

list, // java.util.List<MatOfPoint> pts

false, // isClosed

new Scalar(0, 0, 255), // Scalar object for color

2 // Thickness of the line

);

// Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", matrix, matOfByte);

// Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

// Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

this.matrix = matrix;

// Creating the Writable Image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

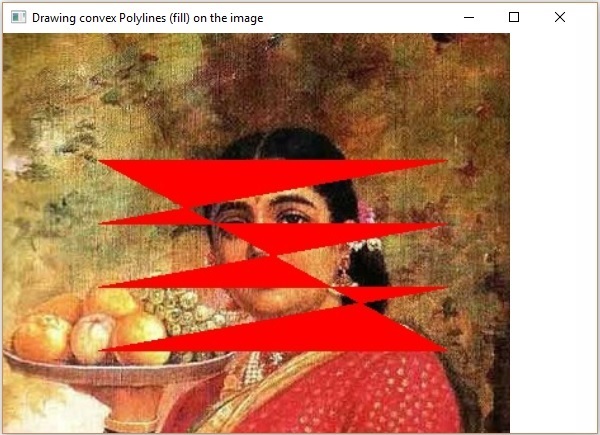

OpenCV – Drawing Convex Polylines

You can draw convex polylines (filled convex polygons) on an image using the fillConvexPoly() method of the Imgproc class. Following is the syntax of this method.

fillConvexPoly(Mat img, MatOfPoint points, Scalar color)

This method accepts the following parameters −

-

img − A Mat object representing the image on which the convex polylines are to be drawn.

-

points − A MatOfPoint object representing the points between which the convex polylines are to be drawn.

-

color − A Scalar object representing the color of the convex polylines, in BGR order.

The constructor of the MatOfPoint class accepts objects of the class Point.

MatOfPoint(Point... a)
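Since fillConvexPoly() is intended for convex outlines, it can help to check a list of points before passing it in. The sketch below is a hypothetical helper, not part of OpenCV: it tests whether the outline turns in the same direction at every vertex, which is a necessary condition for convexity (a self-intersecting outline such as a five-pointed star can still pass it). The class name ConvexCheckSketch and the method turnsOneWay() are assumptions made for illustration.

```java
// Illustrative sketch (hypothetical helper); does not call OpenCV.
final class ConvexCheckSketch {

    // Returns true if the closed outline through pts turns the same way
    // at every vertex. Points are given as {x, y} arrays.
    static boolean turnsOneWay(int[][] pts) {
        int n = pts.length;
        if (n < 3) {
            return false; // fewer than three points do not form a polygon
        }
        int sign = 0;
        for (int i = 0; i < n; i++) {
            int[] p = pts[i];
            int[] q = pts[(i + 1) % n];
            int[] r = pts[(i + 2) % n];
            // Cross product of the edges p->q and q->r.
            long cross = (long) (q[0] - p[0]) * (r[1] - q[1])
                       - (long) (q[1] - p[1]) * (r[0] - q[0]);
            if (cross != 0) {
                int s = cross > 0 ? 1 : -1;
                if (sign == 0) {
                    sign = s;          // remember the first turn direction
                } else if (s != sign) {
                    return false;      // the outline turns the other way
                }
            }
        }
        return true;
    }

    public static void main(String[] args) {
        int[][] square = { { 0, 0 }, { 10, 0 }, { 10, 10 }, { 0, 10 } };
        int[][] zigzag = {
            { 75, 100 }, { 350, 100 }, { 75, 150 }, { 350, 150 },
            { 75, 200 }, { 350, 200 }, { 75, 250 }, { 350, 250 } };
        System.out.println(turnsOneWay(square) + " " + turnsOneWay(zigzag));
        // prints "true false"
    }
}
```

A zigzag sequence of points, alternating between the left and right edges, fails the check, so the filled result for such input should not be expected to look like a simple convex shape.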

Example

The following program demonstrates how to draw convex polylines on an image and display it in a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.MatOfPoint;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class FillConvexPoly extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and drawing the convex polylines on it

FillConvexPoly obj = new FillConvexPoly();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

//Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Drawing convex Polylines (fill) on the image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="E:/OpenCV/chap8/input.jpg";

Mat matrix = Imgcodecs.imread(file);

MatOfPoint matOfPoint = new MatOfPoint (

new Point(75, 100), new Point(350, 100),

new Point(75, 150), new Point(350, 150),

new Point(75, 200), new Point(350, 200),

new Point(75, 250), new Point(350, 250)

);

// Filling the convex polygon

Imgproc.fillConvexPoly (

matrix, // Matrix obj of the image

matOfPoint, // MatOfPoint holding the polygon vertices

new Scalar(0, 0, 255) // Scalar object for color

);

// Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", matrix, matOfByte);

// Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

// Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

this.matrix = matrix;

// Creating the Writable Image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

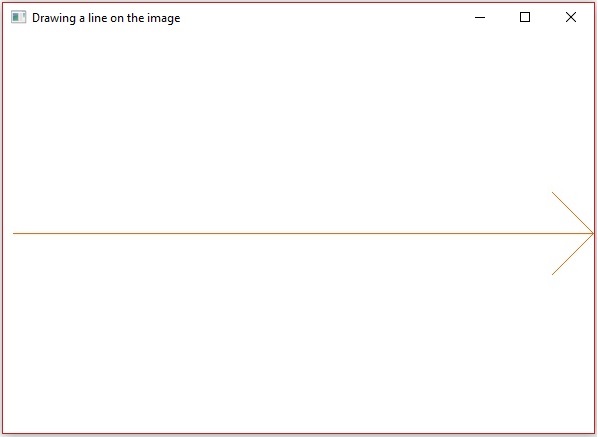

OpenCV – Drawing Arrowed Lines

You can draw an arrowed line on an image using the method arrowedLine() of the Imgproc class. Following is the syntax of this method −

arrowedLine(Mat img, Point pt1, Point pt2, Scalar color)

This method accepts the following parameters −

-

img − A Mat object representing the image on which the arrowed line is to be drawn.

-

pt1 and pt2 − Two Point objects representing the start and end points of the arrowed line. The arrow head is drawn at pt2.

-

color − A Scalar object representing the color of the arrowed line, given in BGR order.
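Because the color is given in BGR order rather than the more familiar RGB order, a value such as Scalar(0, 100, 255) is orange, not blue. The plain-Java sketch below shows the reordering; it does not use OpenCV, and the class name BgrColor and the helper rgbToBgr are hypothetical names introduced only for this illustration.

```java
import java.util.Arrays;

/** Plain-Java sketch (no OpenCV): reorders an RGB triple into BGR order. */
final class BgrColor {

    // Returns the channel values in blue, green, red order.
    // Each channel is expected to be in the 8-bit range 0..255.
    static double[] rgbToBgr(int r, int g, int b) {
        for (int channel : new int[] { r, g, b }) {
            if (channel < 0 || channel > 255) {
                throw new IllegalArgumentException("channel out of range: " + channel);
            }
        }
        return new double[] { b, g, r };
    }

    public static void main(String[] args) {
        // Orange in RGB is (255, 100, 0).
        double[] bgr = rgbToBgr(255, 100, 0);
        System.out.println(Arrays.toString(bgr)); // prints "[0.0, 100.0, 255.0]"
        // In an OpenCV program the three values would then be passed on as
        // new Scalar(bgr[0], bgr[1], bgr[2]).
    }
}
```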

Example

The following program demonstrates how to draw an arrowed line on an image and display it using a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class DrawingArrowedLine extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and drawing on it

DrawingArrowedLine obj = new DrawingArrowedLine();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Drawing an arrowed line on the image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="C:/EXAMPLES/OpenCV/Aish.jpg";

Mat matrix = Imgcodecs.imread(file);

// Drawing an arrowed line

Imgproc.arrowedLine(

matrix, // Matrix obj of the image

new Point(10, 200), // p1

new Point(590, 200), // p2

new Scalar(0, 100, 255) // Scalar object for color

);

// arrowedLine(Mat img, Point pt1, Point pt2, Scalar color)

// Encoding the image

MatOfByte matOfByte = new MatOfByte();

Imgcodecs.imencode(".jpg", matrix, matOfByte);

// Storing the encoded Mat in a byte array

byte[] byteArray = matOfByte.toArray();

// Displaying the image

InputStream in = new ByteArrayInputStream(byteArray);

BufferedImage bufImage = ImageIO.read(in);

this.matrix = matrix;

// Creating the Writable Image

WritableImage writableImage = SwingFXUtils.toFXImage(bufImage, null);

return writableImage;

}

public static void main(String args[]) {

launch(args);

}

}

On executing the above program, you will get the following output −

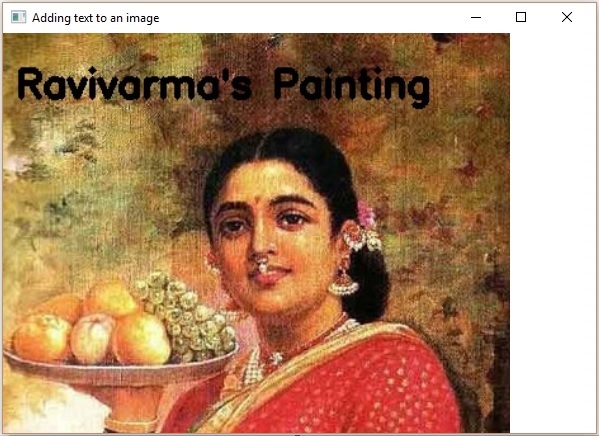

OpenCV – Adding Text

You can add text to an image using the method putText() of the Imgproc class. Following is the syntax of this method.

putText(Mat img, String text, Point org, int fontFace, double fontScale, Scalar color, int thickness)

This method accepts the following parameters −

-

img − A Mat object representing the image to which the text is to be added.

-

text − A String representing the text that is to be added.

-

org − A Point object representing the bottom-left corner of the text string in the image.

-

fontFace − An integer representing the font type (one of the FONT_HERSHEY_* constants).

-

fontScale − A double representing the scale factor that is multiplied by the font-specific base size.

-

color − A Scalar object representing the color of the text that is to be added, given in BGR order.

-

thickness − An integer representing the thickness of the lines used to draw the text. By default, the value of thickness is 1.
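Since org marks the bottom-left corner of the text rather than its top-left corner, placing text at a particular spot takes a little arithmetic. The plain-Java sketch below computes an org value that centers a text box in an image. It does not use OpenCV; the class name TextOrigin and the helper centeredOrigin are hypothetical, and the text width and height are assumed inputs that a real program would typically obtain from a text-measuring call such as getTextSize.

```java
/** Plain-Java sketch (no OpenCV): computes a bottom-left origin that centers text. */
final class TextOrigin {

    // Returns {x, y} of the bottom-left corner for a text box of the given size.
    // Image rows grow downward, so the bottom edge of the text lies half the
    // text height below the vertical center of the image.
    static int[] centeredOrigin(int imgWidth, int imgHeight, int textWidth, int textHeight) {
        int x = (imgWidth - textWidth) / 2;
        int y = (imgHeight + textHeight) / 2;
        return new int[] { x, y };
    }

    public static void main(String[] args) {
        // A 600 x 400 image and a text box assumed to measure 200 x 20 pixels.
        int[] org = centeredOrigin(600, 400, 200, 20);
        System.out.println(org[0] + ", " + org[1]); // prints "200, 210"
        // In an OpenCV program the result would be passed on as new Point(org[0], org[1]).
    }
}
```

The divisions are integer divisions, so the result can be off by one pixel when the sizes have different parity, which is rarely visible in practice.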

Example

The following program demonstrates how to add text to an image and display it using a JavaFX window.

import java.awt.image.BufferedImage;

import java.io.ByteArrayInputStream;

import java.io.InputStream;

import javax.imageio.ImageIO;

import javafx.application.Application;

import javafx.embed.swing.SwingFXUtils;

import javafx.scene.Group;

import javafx.scene.Scene;

import javafx.scene.image.ImageView;

import javafx.scene.image.WritableImage;

import javafx.stage.Stage;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfByte;

import org.opencv.core.Point;

import org.opencv.core.Scalar;

import org.opencv.imgcodecs.Imgcodecs;

import org.opencv.imgproc.Imgproc;

public class AddingTextToImage extends Application {

Mat matrix = null;

@Override

public void start(Stage stage) throws Exception {

// Loading the image and adding text to it

AddingTextToImage obj = new AddingTextToImage();

WritableImage writableImage = obj.LoadImage();

// Setting the image view

ImageView imageView = new ImageView(writableImage);

// setting the fit height and width of the image view

imageView.setFitHeight(600);

imageView.setFitWidth(600);

// Setting the preserve ratio of the image view

imageView.setPreserveRatio(true);

// Creating a Group object

Group root = new Group(imageView);

// Creating a scene object

Scene scene = new Scene(root, 600, 400);

// Setting title to the Stage

stage.setTitle("Adding text to an image");

// Adding scene to the stage

stage.setScene(scene);

// Displaying the contents of the stage

stage.show();

}

public WritableImage LoadImage() throws Exception {

// Loading the OpenCV core library

System.loadLibrary( Core.NATIVE_LIBRARY_NAME );

// Reading the Image from the file and storing it in to a Matrix object

String file ="E:/OpenCV/chap8/input.jpg";

Mat matrix = Imgcodecs.imread(file);

// Adding Text

Imgproc.putText (

matrix, // Matrix obj of the image

"Ravivarma's Painting", // Text to be added

new Point(10, 50), // point