Microsoft Cognitive Toolkit (CNTK) – Introduction

In this chapter, we will learn what CNTK is, what its features are, how versions 1.0 and 2.0 differ, and what the important highlights of version 2.7 are.

What is Microsoft Cognitive Toolkit (CNTK)?

Microsoft Cognitive Toolkit (CNTK), formerly known as Computational Network Toolkit, is a free, easy-to-use, open-source, commercial-grade toolkit that enables us to train deep learning algorithms to learn like the human brain. It enables us to create some popular deep learning systems like feed-forward neural network time series prediction systems and Convolutional neural network (CNN) image classifiers.

For optimal performance, its framework functions are written in C++. Although we can call these functions from C++, the most commonly used approach is to call them from a Python program.

CNTK’s Features

Following are some of the features and capabilities offered in the latest version of Microsoft CNTK:

Built-in components

- CNTK has highly optimised built-in components that can handle multi-dimensional dense or sparse data from Python, C++ or BrainScript.

- We can implement CNN, FNN, RNN, Batch Normalisation and Sequence-to-Sequence with attention.

- It provides us the functionality to add new user-defined core-components on the GPU from Python.

- It also provides automatic hyperparameter tuning.

- We can implement Reinforcement learning, Generative Adversarial Networks (GANs), Supervised as well as Unsupervised learning.

- For massive datasets, CNTK has built-in optimised readers.

Usage of resources efficiently

- CNTK provides us parallelism with high accuracy on multiple GPUs/machines via 1-bit SGD.

- To fit the largest models in GPU memory, it provides memory sharing and other built-in methods.

Express our own networks easily

- CNTK has full APIs for defining your own network, learners, readers, training and evaluation from Python, C++, and BrainScript.

- Using CNTK, we can easily evaluate models with Python, C++, C# or BrainScript.

- It provides both high-level as well as low-level APIs.

- It can automatically infer shapes based on our data.

- It has fully optimised symbolic Recurrent Neural Network (RNN) loops.

Measuring model performance

- CNTK provides various components to measure the performance of the neural networks you build.

- It generates log data from your model and the associated optimiser, which we can use to monitor the training process.

Version 1.0 vs Version 2.0

The following table compares CNTK Version 1.0 and 2.0:

| Version 1.0 | Version 2.0 |
|---|---|
| It was released in 2016. | It is a significant rewrite of Version 1.0 and was released in June 2017. |
| It used a proprietary scripting language called BrainScript. | Its framework functions can be called using C++ or Python. We can easily load our modules in C# or Java. BrainScript is also supported by Version 2.0. |
| It runs on both Windows and Linux systems but not directly on Mac OS. | It also runs on both Windows (Win 8.1, Win 10, Server 2012 R2 and later) and Linux systems but not directly on Mac OS. |

Important Highlights of Version 2.7

Version 2.7 is the last main released version of Microsoft Cognitive Toolkit. Following are some important highlights of this last released version of CNTK:

- Full support for ONNX 1.4.1.

- Support for CUDA 10 for both Windows and Linux systems.

- It supports advanced Recurrent Neural Network (RNN) loops in ONNX export.

- It can export models larger than 2GB in ONNX format.

- It supports FP16 in the training action of the BrainScript scripting language.

Microsoft Cognitive Toolkit (CNTK) – Getting Started

Here, we will look at installing CNTK on Windows and on Linux. The chapter also covers installing the CNTK package, the steps to install Anaconda, the CNTK files and directory structure, and the organisation of the CNTK library.

Prerequisites

In order to install CNTK, we must have Python installed on our computers. You can go to the link and select the latest version for your OS, i.e. Windows and Linux/Unix. For basic tutorial on Python, you can refer to the link .

CNTK is supported for Windows as well as Linux so we will walk through both of them.

Installing on Windows

In order to run CNTK on Windows, we will be using the Anaconda version of Python. Anaconda is a redistribution of Python that includes additional packages such as SciPy and scikit-learn, which CNTK uses to perform various useful calculations.

So, first let us see the steps to install Anaconda on your machine −

Step 1 − First, download the setup files from the Anaconda website.

Step 2 − Once you have downloaded the setup files, start the installation and follow the installer's instructions.

Step 3 − Once installed, Anaconda also installs some other utilities, including a command prompt that automatically adds all the Anaconda executables to your PATH variable. From this prompt, we can manage our Python environment, install packages and run Python scripts.

Installing CNTK package

Once Anaconda installation is done, you can use the most common way to install the CNTK package through the pip executable by using following command −

pip install cntk

There are various other methods to install Cognitive Toolkit on your machine. Microsoft has a neat set of documentation that explains the other installation methods in detail. Please follow the link .

Installing on Linux

Installation of CNTK on Linux is a bit different from its installation on Windows. On Linux we are again going to use Anaconda to install CNTK, but instead of a graphical installer we will use a terminal-based installer. Although the installer works with almost all Linux distributions, we limit the description to Ubuntu.

So, first let us see the steps to install Anaconda on your machine −

Steps to install Anaconda

Step 1 − Before installing Anaconda, make sure that the system is fully up to date. To do so, execute the following two commands inside a terminal −

sudo apt update

sudo apt upgrade

Step 2 − Once the computer is updated, get the URL from the public website for the latest Anaconda installation files.

Step 3 − Once the URL is copied, open a terminal window and execute the following command −

wget -O anaconda-installer.sh url

Replace the url placeholder with the URL copied from the Anaconda website.

Step 4 − Next, with the help of following command, we can install Anaconda −

sh ./anaconda-installer.sh

The above command will by default install Anaconda3 inside our home directory.

Installing CNTK package

Once Anaconda installation is done, you can use the most common way to install the CNTK package through the pip executable by using following command −

pip install cntk

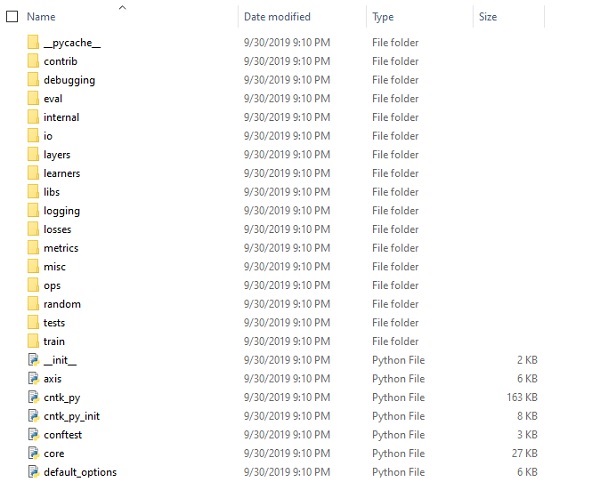

Examining CNTK files & directory structure

Once CNTK is installed as a Python package, we can examine its file and directory structure. It’s at C:Users

Verifying CNTK installation

Once CNTK is installed as a Python package, you should verify that CNTK has been installed correctly. From Anaconda command shell, start Python interpreter by entering ipython. Then, import CNTK by entering the following command.

import cntk as c

Once imported, check its version with the help of following command −

print(c.__version__)

The interpreter will respond with the installed CNTK version. If the import fails or no version is printed, there is a problem with the installation.
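The same check can be wrapped in a small script that reports a failed import instead of raising an error. This is only a sketch: `installed_version` is a hypothetical helper name, not part of CNTK, and it relies only on Python's standard `importlib` module.

```python
import importlib


def installed_version(package_name):
    """Return the package's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(package_name)
    except ImportError:
        return None
    # Some packages do not define __version__, so fall back to a marker string
    return getattr(module, "__version__", "unknown")


version = installed_version("cntk")
if version is None:
    print("CNTK could not be imported; check the installation")
else:
    print("CNTK version:", version)
```

Only `ImportError` is caught here; other failures, such as a missing native library, would still surface as an exception.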

The CNTK library organisation

CNTK, technically a Python package, is organised into 13 high-level sub-packages and 8 smaller sub-packages. The following table lists the 10 most frequently used packages:

| Sr.No | Package Name & Description |
|---|---|
| 1 | cntk.io Contains functions for reading data. For example: next_minibatch() |
| 2 | cntk.layers Contains high-level functions for creating neural networks. For example: Dense() |
| 3 | cntk.learners Contains functions for training. For example: sgd() |
| 4 | cntk.losses Contains functions to measure training error. For example: squared_error() |
| 5 | cntk.metrics Contains functions to measure model error. For example: classification_error |
| 6 | cntk.ops Contains low-level functions for creating neural networks. For example: tanh() |
| 7 | cntk.random Contains functions to generate random numbers. For example: normal() |
| 8 | cntk.train Contains training functions. For example: train_minibatch() |
| 9 | cntk.initializer Contains model parameter initializers. For example: normal() and uniform() |
| 10 | cntk.variables Contains low-level constructs. For example: Parameter() and Variable() |

Microsoft Cognitive Toolkit (CNTK) – CPU and GPU

Microsoft Cognitive Toolkit offers two different build versions namely CPU-only and GPU-only.

CPU only build version

The CPU-only build version of CNTK uses the optimised Intel MKLML. MKLML is a subset of MKL (Math Kernel Library) that is released with Intel MKL-DNN as a trimmed version of Intel MKL for MKL-DNN.

GPU only build version

On the other hand, the GPU-only build version of CNTK uses highly optimised NVIDIA libraries such as CUB and cuDNN. It supports distributed training across multiple GPUs and multiple machines. For even faster distributed training in CNTK, the GPU-build version also includes −

- MSR-developed 1bit-quantized SGD.

- Block-momentum SGD parallel training algorithms.

Enabling GPU with CNTK on Windows

In the previous section, we saw how to install the basic version of CNTK to use with the CPU. Now let’s discuss how we can install CNTK to use with a GPU. Before diving into it, you should first make sure that you have a supported graphics card.

At present, CNTK supports NVIDIA graphics cards with at least CUDA compute capability 3.0. To make sure, you can check whether your GPU supports CUDA.

So, let us see the steps to enable GPU with CNTK on Windows OS −

Step 1 − Depending on the graphics card you are using, first you need to have the latest GeForce or Quadro drivers for your graphics card.

Step 2 − Once you have installed the drivers, you need to download the CUDA toolkit Version 9.0 for Windows from the NVIDIA website. After downloading, run the installer and follow the instructions.

Step 3 − Next, you need to install cuDNN binaries from NVIDIA website . With CUDA 9.0 version, cuDNN 7.4.1 works well. Basically, cuDNN is a layer on the top of CUDA, used by CNTK.

Step 4 − After downloading the cuDNN binaries, you need to extract the zip file into the root folder of your CUDA toolkit installation.

Step 5 − This is the last step which will enable GPU usage inside CNTK. Execute the following command inside the Anaconda prompt on Windows OS −

pip install cntk-gpu

Enabling GPU with CNTK on Linux

Let us see how we can enable GPU with CNTK on Linux OS −

Downloading the CUDA toolkit

First, you need to install the CUDA toolkit from NVIDIA website .

Running the installer

Now, once you have the binaries on disk, run the installer by opening a terminal, executing the following command and following the instructions on screen −

sh cuda_9.0.176_384.81_linux-run

Modify Bash profile script

After installing the CUDA toolkit on your Linux machine, you need to modify the Bash profile script. For this, first open the $HOME/.bashrc file in a text editor. Now, at the end of the script, include the following lines −

export PATH=/usr/local/cuda-9.0/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-9.0/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Installing cuDNN libraries

At last we need to install cuDNN binaries. It can be downloaded from NVIDIA website . With CUDA 9.0 version, cuDNN 7.4.1 works well. Basically, cuDNN is a layer on the top of CUDA, used by CNTK.

Once you have downloaded the version for Linux, extract it to the /usr/local/cuda-9.0 folder by using the following command −

tar xvzf cudnn-9.0-linux-x64-v7.4.1.5.tgz -C /usr/local/cuda-9.0/

Change the path to the filename as required.

CNTK – Sequence Classification

In this chapter, we will learn in detail about the sequences in CNTK and its classification.

Tensors

CNTK is built around the concept of a tensor. CNTK inputs, outputs and parameters are all organised as tensors, which can be thought of as generalised matrices. Every tensor has a rank −

- A tensor of rank 0 is a scalar.

- A tensor of rank 1 is a vector.

- A tensor of rank 2 is a matrix.

Here, these different dimensions are referred to as axes.

Static axes and Dynamic axes

As the name implies, the static axes have the same length throughout the network’s life. On the other hand, the length of dynamic axes can vary from instance to instance. In fact, their length is typically not known before each minibatch is presented.

Dynamic axes are like static axes because they also define a meaningful grouping of the numbers contained in the tensor.

Example

To make it clearer, let’s see how a minibatch of short video clips is represented in CNTK. Suppose that all the video clips have a resolution of 640 * 480 and are shot in color, which is typically encoded with three channels. It means that our minibatch has the following −

- Three static axes of length 640, 480 and 3 respectively.

- Two dynamic axes: the length of the video and the minibatch axis.

It means that a minibatch of 16 videos, each of which is 240 frames long, would be represented as a single 16*240*3*640*480 tensor.
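The shape arithmetic can be sketched in plain Python. The helper name `minibatch_shape` is hypothetical and this is not how CNTK stores data internally; it only shows that the static axes stay fixed while the two dynamic axes change from one minibatch to the next.

```python
from math import prod

# Static axes: identical for every clip (channels, width, height)
STATIC_AXES = (3, 640, 480)


def minibatch_shape(num_clips, num_frames):
    """Combine the two dynamic axes with the fixed static axes."""
    return (num_clips, num_frames) + STATIC_AXES


first = minibatch_shape(16, 240)   # 16 clips of 240 frames each
second = minibatch_shape(8, 90)    # a later minibatch: different dynamic lengths

print(first, "->", prod(first), "values")
print(second, "->", prod(second), "values")
```

Only the first two entries differ between the two shapes, which is exactly the distinction between dynamic and static axes.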

Working with sequences in CNTK

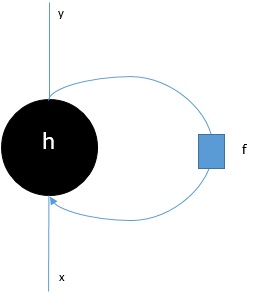

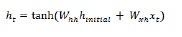

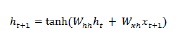

Let us understand sequences in CNTK by first learning about Long-Short Term Memory Network.

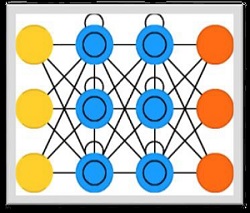

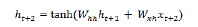

Long-Short Term Memory Network (LSTM)

Long-short term memory (LSTM) networks were introduced by Hochreiter & Schmidhuber. They solved the problem of getting a basic recurrent layer to remember things for a long time. The architecture of LSTM is given above in the diagram. As we can see, it has input neurons, memory cells and output neurons. In order to combat the vanishing gradient problem, LSTM networks use an explicit memory cell (which stores the previous values) and the following gates −

- Forget gate − As the name implies, it tells the memory cell to forget the previous values. The memory cell stores the values until the forget gate tells it to forget them.

- Input gate − As the name implies, it adds new content to the cell.

- Output gate − As the name implies, it decides when to pass along the vectors from the cell to the next hidden state.
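How the three gates interact can be sketched for a single LSTM unit with a scalar input and scalar state. This follows the standard textbook LSTM equations rather than CNTK's internal implementation, and the parameter names and values in the dictionary are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell (scalar input and state)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g       # keep part of the old memory, add new content
    h = o * math.tanh(c)         # expose part of the memory as the hidden state
    return h, c


# Hypothetical parameter values, chosen only for illustration
params = {name: 0.5 for name in
          ("wf", "uf", "bf", "wi", "ui", "bi", "wo", "uo", "bo", "wg", "ug", "bg")}

h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:      # a short input sequence
    h, c = lstm_step(x, h, c, params)
print("final hidden state:", h, "final cell state:", c)
```

With a large forget-gate bias and a very negative input-gate bias, the cell state passes through a step almost unchanged, which is how the cell remembers values over long sequences.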

It is very easy to work with sequences in CNTK. Let’s see it with the help of following example −

import sys
import os
from cntk import Trainer, Axis
from cntk.io import (MinibatchSource, CTFDeserializer, StreamDef, StreamDefs,
                     INFINITELY_REPEAT)
from cntk.learners import sgd, learning_parameter_schedule_per_sample
from cntk import (input_variable, cross_entropy_with_softmax,
                  classification_error, sequence)
from cntk.logging import ProgressPrinter
from cntk.layers import Sequential, Embedding, Recurrence, LSTM, Dense

# Create a reader for a CTF file with sparse features and dense labels
def create_reader(path, is_training, input_dim, label_dim):
    return MinibatchSource(CTFDeserializer(path, StreamDefs(
        features=StreamDef(field='x', shape=input_dim, is_sparse=True),
        labels=StreamDef(field='y', shape=label_dim, is_sparse=False)
    )), randomize=is_training,
        max_sweeps=INFINITELY_REPEAT if is_training else 1)

# Embedding -> LSTM recurrence -> last step of the sequence -> Dense output
def LSTM_sequence_classifier_net(input, num_output_classes, embedding_dim,
                                 LSTM_dim, cell_dim):
    lstm_classifier = Sequential([Embedding(embedding_dim),
                                  Recurrence(LSTM(LSTM_dim, cell_dim)),
                                  sequence.last,
                                  Dense(num_output_classes)])
    return lstm_classifier(input)

def train_sequence_classifier():
    input_dim = 2000
    cell_dim = 25
    hidden_dim = 25
    embedding_dim = 50
    num_output_classes = 5

    # Input variables for the features (a sequence) and the label
    features = sequence.input_variable(shape=input_dim, is_sparse=True)
    label = input_variable(num_output_classes)

    classifier_output = LSTM_sequence_classifier_net(
        features, num_output_classes, embedding_dim, hidden_dim, cell_dim)

    ce = cross_entropy_with_softmax(classifier_output, label)
    pe = classification_error(classifier_output, label)

    rel_path = ("../../../Tests/EndToEndTests/Text/" +
                "SequenceClassification/Data/Train.ctf")
    path = os.path.join(os.path.dirname(os.path.abspath(__file__)), rel_path)
    reader = create_reader(path, True, input_dim, num_output_classes)

    input_map = {
        features: reader.streams.features,
        label: reader.streams.labels
    }

    lr_per_sample = learning_parameter_schedule_per_sample(0.0005)
    progress_printer = ProgressPrinter(0)
    trainer = Trainer(classifier_output, (ce, pe),
                      sgd(classifier_output.parameters, lr=lr_per_sample),
                      progress_printer)

    # Train on minibatches of sequences
    minibatch_size = 200
    for i in range(255):
        mb = reader.next_minibatch(minibatch_size, input_map=input_map)
        trainer.train_minibatch(mb)

    evaluation_average = float(trainer.previous_minibatch_evaluation_average)
    loss_average = float(trainer.previous_minibatch_loss_average)
    return evaluation_average, loss_average

if __name__ == '__main__':
    error, _ = train_sequence_classifier()
    print(" error: %f" % error)

Output

average      since    average      since   examples
   loss       last     metric       last
------------------------------------------------------
   1.61       1.61      0.886      0.886         44
   1.61        1.6      0.714      0.629        133
    1.6       1.59       0.56      0.448        316
   1.57       1.55      0.479       0.41        682
   1.53        1.5      0.464      0.449       1379
   1.46        1.4      0.453      0.441       2813
   1.37       1.28       0.45      0.447       5679
    1.3       1.23      0.448      0.447      11365
error: 0.333333

The detailed explanation of the above program will be covered in next sections, especially when we will be constructing Recurrent Neural networks.

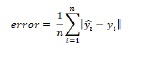

CNTK – Logistic Regression Model

This chapter deals with constructing a logistic regression model in CNTK.

Basics of Logistic Regression model

Logistic Regression, one of the simplest ML techniques, is used especially for binary classification. In other words, it creates a prediction model for situations where the variable to predict can take one of just two categorical values. One of the simplest examples of Logistic Regression is to predict whether a person is male or female, based on the person’s age, voice, hair and so on.

Example

Let’s understand the concept of Logistic Regression mathematically with the help of another example −

Suppose, we want to predict the credit worthiness of a loan application; 0 means reject, and 1 means approve, based on applicant debt , income and credit rating. We represent debt with X1, income with X2 and credit rating with X3.

In Logistic Regression, we determine a weight value, represented by w, for every feature and a single bias value, represented by b.

Now suppose,

X1 = 3.0 X2 = -2.0 X3 = 1.0

And suppose we determine weight and bias as follows −

W1 = 0.65, W2 = 1.75, W3 = 2.05 and b = 0.33

Now, for predicting the class, we need to apply the following formula −

Z = (X1*W1) + (X2*W2) + (X3*W3) + b, i.e. Z = (3.0)*(0.65) + (-2.0)*(1.75) + (1.0)*(2.05) + 0.33 = 0.83

Next, we need to compute P = 1.0/(1.0 + exp(-Z)). Here, exp() is the exponential function, i.e. Euler’s number e raised to the given power.

P = 1.0/(1.0 + exp(-0.83)) ≈ 0.6964

The P value can be interpreted as the probability that the class is 1. If P < 0.5, the prediction is class = 0; otherwise (P >= 0.5) the prediction is class = 1.
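The arithmetic above can be reproduced with a few lines of plain Python. This is only a sketch of the calculation with the example values; the variable names are chosen for illustration.

```python
import math

x = [3.0, -2.0, 1.0]      # debt, income, credit rating
w = [0.65, 1.75, 2.05]    # one weight per feature
b = 0.33                  # single bias value

# Weighted sum of the inputs plus the bias
z = sum(xi * wi for xi, wi in zip(x, w)) + b

# Logistic (sigmoid) function turns z into a probability for class 1
p = 1.0 / (1.0 + math.exp(-z))

predicted_class = 1 if p >= 0.5 else 0
print("z =", round(z, 2))
print("p =", round(p, 4))
print("predicted class =", predicted_class)
```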

To determine the values of weight and bias, we must obtain a set of training data having the known input predictor values and known correct class labels values. After that, we can use an algorithm, generally Gradient Descent, in order to find the values of weight and bias.
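To make the idea concrete, here is a minimal sketch of batch gradient descent for logistic regression in plain Python. The toy data, the learning rate and the number of epochs are assumptions chosen for illustration; they are not the values used later in this chapter.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean_cross_entropy(data, w, b):
    """Average binary cross-entropy of the model (w, b) over the data."""
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        p = min(max(p, 1e-12), 1.0 - 1e-12)   # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


def train(data, lr=0.1, epochs=500):
    """Fit weights and bias with batch gradient descent."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y                        # gradient of the loss w.r.t. z
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


# Hypothetical toy data: (features, class label)
toy_data = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0),
            ([2.0, 2.0], 1), ([2.0, 3.0], 1), ([3.0, 2.0], 1)]

initial_loss = mean_cross_entropy(toy_data, [0.0, 0.0], 0.0)
weights, bias = train(toy_data)
final_loss = mean_cross_entropy(toy_data, weights, bias)
print("loss before:", initial_loss, "loss after:", final_loss)
```

Each epoch moves the weights a small step against the gradient of the cross-entropy loss, so the loss after training is lower than the loss at the all-zero starting point.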

LR model implementation example

For this LR model, we are going to use the following data set −

1.0, 2.0, 0
3.0, 4.0, 0
5.0, 2.0, 0
6.0, 3.0, 0
8.0, 1.0, 0
9.0, 2.0, 0
1.0, 4.0, 1
2.0, 5.0, 1
4.0, 6.0, 1
6.0, 5.0, 1
7.0, 3.0, 1
8.0, 5.0, 1

To start this LR model implementation in CNTK, we need to first import the following packages −

import numpy as np
import cntk as C

The program is structured with a main() function as follows −

def main():
    print("Using CNTK version = " + str(C.__version__) + "\n")

Now, we need to load the training data into memory as follows −

    data_file = ".\\dataLRmodel.txt"
    print("Loading data from " + data_file + "\n")
    features_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",", skiprows=0, usecols=[0,1])
    labels_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",", skiprows=0, usecols=[2], ndmin=2)

Now, we will be creating a training program that creates a logistic regression model which is compatible with the training data −

    features_dim = 2
    labels_dim = 1
    X = C.ops.input_variable(features_dim, np.float32)
    y = C.input_variable(labels_dim, np.float32)
    W = C.parameter(shape=(features_dim, 1)) # trainable cntk.Parameter
    b = C.parameter(shape=(labels_dim))
    z = C.times(X, W) + b
    p = 1.0 / (1.0 + C.exp(-z))
    model = p

Now, we need to create the learner and the trainer as follows −

    ce_error = C.binary_cross_entropy(model, y) # CE a bit more principled for LR
    fixed_lr = 0.010
    learner = C.sgd(model.parameters, fixed_lr)
    trainer = C.Trainer(model, (ce_error), [learner])
    max_iterations = 4000

LR Model training

Once we have created the LR model, it is time to start the training process −

    np.random.seed(4)
    N = len(features_mat)
    for i in range(0, max_iterations):
        row = np.random.choice(N,1) # pick a random row from training items
        trainer.train_minibatch({ X: features_mat[row], y: labels_mat[row] })
        if i % 1000 == 0 and i > 0:
            mcee = trainer.previous_minibatch_loss_average
            print(str(i) + " Cross-entropy error on curr item = %0.4f " % mcee)

Now, with the help of the following code, we can print the model weights and bias −

    np.set_printoptions(precision=4, suppress=True)
    print("Model weights: ")
    print(W.value)
    print("Model bias:")
    print(b.value)
    print("")

if __name__ == "__main__":
    main()

Training a Logistic Regression model – Complete example

import numpy as np
import cntk as C

def main():
    print("Using CNTK version = " + str(C.__version__) + "\n")

    # Load the training data: two feature columns and one label column
    data_file = ".\\dataLRmodel.txt" # provide the name and the location of data file
    print("Loading data from " + data_file + "\n")
    features_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",", skiprows=0, usecols=[0,1])
    labels_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",", skiprows=0, usecols=[2], ndmin=2)

    # Define the logistic regression model
    features_dim = 2
    labels_dim = 1
    X = C.ops.input_variable(features_dim, np.float32)
    y = C.input_variable(labels_dim, np.float32)
    W = C.parameter(shape=(features_dim, 1)) # trainable cntk.Parameter
    b = C.parameter(shape=(labels_dim))
    z = C.times(X, W) + b
    p = 1.0 / (1.0 + C.exp(-z))
    model = p

    # Create the learner and the trainer
    ce_error = C.binary_cross_entropy(model, y) # CE a bit more principled for LR
    fixed_lr = 0.010
    learner = C.sgd(model.parameters, fixed_lr)
    trainer = C.Trainer(model, (ce_error), [learner])
    max_iterations = 4000

    # Train on one randomly chosen item per iteration
    np.random.seed(4)
    N = len(features_mat)
    for i in range(0, max_iterations):
        row = np.random.choice(N,1) # pick a random row from training items
        trainer.train_minibatch({ X: features_mat[row], y: labels_mat[row] })
        if i % 1000 == 0 and i > 0:
            mcee = trainer.previous_minibatch_loss_average
            print(str(i) + " Cross-entropy error on curr item = %0.4f " % mcee)

    # Print the learned weights and bias
    np.set_printoptions(precision=4, suppress=True)
    print("Model weights: ")
    print(W.value)
    print("Model bias:")
    print(b.value)

if __name__ == "__main__":
    main()

Output

Using CNTK version = 2.7
1000 cross entropy error on curr item = 0.1941
2000 cross entropy error on curr item = 0.1746
3000 cross entropy error on curr item = 0.0563
Model weights:
[[-0.2049]
 [ 0.9666]]
Model bias:
[-2.2846]

Prediction using trained LR Model

Once the LR model has been trained, we can use it for prediction as follows −

First of all, our evaluation program imports the numpy package and loads the training data into a feature matrix and a class label matrix, in the same way as the training program we implemented above −

import numpy as np

def main():
    data_file = ".\\dataLRmodel.txt" # provide the name and the location of data file
    features_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",", skiprows=0, usecols=(0,1))
    labels_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",", skiprows=0, usecols=[2], ndmin=2)

Next, it is time to set the values of the weights and the bias that were determined by our training program −

    print("Setting weights and bias values \n")
    weights = np.array([0.0925, 1.1722], dtype=np.float32)
    bias = np.array([-4.5400], dtype=np.float32)
    N = len(features_mat)
    features_dim = 2

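Next, our evaluation program will compute the logistic regression probability by walking through each training item as follows −

    print("item pred_prob pred_label act_label result")
    for i in range(0, N): # each item
        x = features_mat[i]
        z = 0.0
        for j in range(0, features_dim):
            z += x[j] * weights[j]
        z += bias[0]
        pred_prob = 1.0 / (1.0 + np.exp(-z))
        pred_label = 0 if pred_prob < 0.5 else 1
        # The rest of this loop is cut off in the source; the lines below are
        # a reconstruction based on the columns shown in the output
        act_label = int(labels_mat[i][0])
        result = "correct" if pred_label == act_label else "WRONG"
        print("%2d %0.4f %d %d %s" % (i, pred_prob, pred_label, act_label, result))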

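Now let us demonstrate how to do prediction −

    x = np.array([9.5, 4.5], dtype=np.float32)
    print("\nPredicting class for age, education = ")
    print(x)
    z = 0.0
    for j in range(0, features_dim):
        z += x[j] * weights[j]
    z += bias[0]
    p = 1.0 / (1.0 + np.exp(-z))
    print("Predicted p = " + str(p))
    # The branch below is cut off in the source; it is reconstructed from the
    # 0.5 threshold rule described earlier
    if p < 0.5:
        print("Predicted class = 0")
    else:
        print("Predicted class = 1")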

Complete prediction evaluation program

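import numpy as np

def main():
    data_file = ".\\dataLRmodel.txt" # provide the name and the location of data file
    features_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",",
                              skiprows=0, usecols=(0,1))
    labels_mat = np.loadtxt(data_file, dtype=np.float32, delimiter=",",
                            skiprows=0, usecols=[2], ndmin=2)

    print("Setting weights and bias values \n")
    weights = np.array([0.0925, 1.1722], dtype=np.float32)
    bias = np.array([-4.5400], dtype=np.float32)
    N = len(features_mat)
    features_dim = 2

    print("item pred_prob pred_label act_label result")
    for i in range(0, N): # each item
        x = features_mat[i]
        z = 0.0
        for j in range(0, features_dim):
            z += x[j] * weights[j]
        z += bias[0]
        pred_prob = 1.0 / (1.0 + np.exp(-z))
        pred_label = 0 if pred_prob < 0.5 else 1
        # The source listing is cut off here; everything below is a
        # reconstruction based on the output columns and the 0.5 threshold rule
        act_label = int(labels_mat[i][0])
        result = "correct" if pred_label == act_label else "WRONG"
        print("%2d %0.4f %d %d %s" % (i, pred_prob, pred_label, act_label, result))

    x = np.array([9.5, 4.5], dtype=np.float32)
    print("\nPredicting class for age, education = ")
    print(x)
    z = float(np.dot(x, weights) + bias[0])
    p = 1.0 / (1.0 + np.exp(-z))
    print("Predicted p = " + str(p))
    print("Predicted class = " + str(0 if p < 0.5 else 1))

if __name__ == "__main__":
    main()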

Output

Setting weights and bias values.

Item pred_prob pred_label act_label result

0 0.3640 0 0 correct

1 0.7254 1 0 WRONG

2 0.2019 0 0 correct

3 0.3562 0 0 correct

4 0.0493 0 0 correct

5 0.1005 0 0 correct

6 0.7892 1 1 correct

7 0.8564 1 1 correct

8 0.9654 1 1 correct

9 0.7587 1 1 correct

10 0.3040 0 1 WRONG

11 0.7129 1 1 correct

Predicting class for age, education =

[9.5 4.5]

Predicting p = 0.526487952

Predicting class = 1
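As a cross-check, the per-item evaluation can be sketched in plain Python. One assumption is made loudly here: the weights and bias are the values printed in the training output earlier in this chapter (-0.2049, 0.9666 and -2.2846), not the values hard-coded in the evaluation program, so the probabilities will only approximately match the listing above.

```python
import math

# Training items from the data set used in this chapter: (x1, x2, class label)
data = [
    (1.0, 2.0, 0), (3.0, 4.0, 0), (5.0, 2.0, 0), (6.0, 3.0, 0),
    (8.0, 1.0, 0), (9.0, 2.0, 0), (1.0, 4.0, 1), (2.0, 5.0, 1),
    (4.0, 6.0, 1), (6.0, 5.0, 1), (7.0, 3.0, 1), (8.0, 5.0, 1),
]

# Assumption: weights and bias taken from the printed training output
weights = (-0.2049, 0.9666)
bias = -2.2846


def predict_prob(x1, x2):
    """Probability that the item belongs to class 1."""
    z = x1 * weights[0] + x2 * weights[1] + bias
    return 1.0 / (1.0 + math.exp(-z))


num_correct = 0
for index, (x1, x2, actual) in enumerate(data):
    prob = predict_prob(x1, x2)
    predicted = 0 if prob < 0.5 else 1
    num_correct += int(predicted == actual)
    status = "correct" if predicted == actual else "WRONG"
    print("%2d  %0.4f  %d  %d  %s" % (index, prob, predicted, actual, status))

accuracy = num_correct / len(data)
print("accuracy = %0.4f" % accuracy)
```

Reporting the overall accuracy alongside the per-item results gives a single number to compare between training runs.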

CNTK - Neural Network (NN) Concepts

This chapter deals with the concepts of neural networks in CNTK.

As we know, a neural network is made of several layers of neurons. The question is how we can model the layers of a NN in CNTK. It can be done with the help of the layer functions defined in the layers module.

Layer function

Actually, in CNTK, working with the layers has a distinct functional programming feel to it. Layer function looks like a regular function and it produces a mathematical function with a set of predefined parameters. Let’s see how we can create the most basic layer type, Dense, with the help of layer function.

Example

With the help of following basic steps, we can create the most basic layer type −

Step 1 − First, we need to import the Dense layer function from the layers’ package of CNTK.

from cntk.layers import Dense

Step 2 − Next from the CNTK root package, we need to import the input_variable function.

from cntk import input_variable

Step 3 − Now, we need to create a new input variable using the input_variable function. We also need to provide its size.

feature = input_variable(100)

Step 4 − At last, we will create a new layer using Dense function along with providing the number of neurons we want.

layer = Dense(40)(feature)

Now, we can invoke the configured Dense layer function to connect the Dense layer to the input.

Complete implementation example

from cntk.layers import Dense

from cntk import input_variable

feature= input_variable(100)

layer = Dense(40)(feature)

Customizing layers

As we have seen, CNTK provides us with a pretty good set of defaults for building NNs. The behavior as well as the performance of the NN differs based on the activation function and other settings we choose. That is why it is good to understand what we can configure.

Steps to configure a Dense layer

Each layer in a NN has its own configuration options. For the Dense layer, we have the following important settings to define −

- shape − As the name implies, it defines the output shape of the layer, which in turn determines the number of neurons in that layer.

- activation − It defines the activation function of that layer, so it can transform the input data.

- init − It defines the initialisation function of that layer. It will initialise the parameters of the layer when we start training the NN.

Let’s see the steps with the help of which we can configure a Dense layer −

Step 1 − First, we need to import the Dense layer function from the layers package of CNTK.

from cntk.layers import Dense

Step 2 − Next, from the CNTK ops package, we need to import the sigmoid operator. It will be used as the activation function.

from cntk.ops import sigmoid

Step 3 − Now, from the initializer package, we need to import the glorot_uniform initializer.

from cntk.initializer import glorot_uniform

Step 4 − At last, we will create a new layer using the Dense function, providing the number of neurons as the first argument. We also provide the sigmoid operator as the activation function and glorot_uniform as the init function for the layer.

layer = Dense(50, activation = sigmoid, init = glorot_uniform)

Complete implementation example −

from cntk.layers import Dense
from cntk.ops import sigmoid
from cntk.initializer import glorot_uniform

layer = Dense(50, activation = sigmoid, init = glorot_uniform)
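To see what such a configured layer computes, here is a rough sketch in plain Python of a 100-input, 50-neuron dense layer with a sigmoid activation. It uses the standard Glorot uniform formula; CNTK's own glorot_uniform may apply a different scale factor, and the function names and the input size of 100 are assumptions made for illustration.

```python
import math
import random


def glorot_uniform(fan_in, fan_out, rng):
    """Sample a fan_in x fan_out weight matrix from U(-limit, limit)."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]


def dense_forward(x, weights, bias):
    """Compute sigmoid(x . W + b) for one input vector."""
    outputs = []
    for j in range(len(bias)):
        z = sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs


rng = random.Random(0)             # fixed seed so the sketch is repeatable
W = glorot_uniform(100, 50, rng)   # init: initial values of the parameters
b = [0.0] * 50                     # shape: 50 neurons means 50 outputs
x = [rng.random() for _ in range(100)]

y = dense_forward(x, W, b)         # activation: sigmoid squashes into (0, 1)
print(len(y), min(y), max(y))
```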

Optimizing the parameters

Till now, we have seen how to create the structure of a NN and how to configure various settings. Here, we will see how we can optimise the parameters of a NN. This is done with a combination of two components, namely learners and trainers.

trainer component

The first component used to optimise the parameters of a NN is the trainer component. It basically implements the backpropagation process. It passes the data through the NN to obtain a prediction.

After that, it uses another component called the learner to obtain new values for the parameters of the NN. Once it obtains the new values, it applies them and repeats the process until an exit criterion is met.

learner component

The second component used to optimise the parameters of a NN is the learner component, which is basically responsible for performing the gradient descent algorithm.

Learners included in the CNTK library

Following is a list of some of the interesting learners included in the CNTK library −

- Stochastic Gradient Descent (SGD) − This learner represents the basic stochastic gradient descent, without any extras.

- Momentum Stochastic Gradient Descent (MomentumSGD) − On top of SGD, this learner applies momentum to overcome the problem of local minima.

- RMSProp − This learner, in order to control the rate of descent, uses decaying learning rates.

- Adam − This learner, in order to decrease the rate of descent over time, uses decaying momentum.

- Adagrad − This learner uses different learning rates for frequently and infrequently occurring features.
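The difference between the first two learners can be sketched in plain Python on a toy one-parameter loss. This uses the textbook update rules; CNTK's momentum_sgd uses a slightly different formulation, and the loss function, learning rate and momentum value here are assumptions chosen for illustration.

```python
def gradient(w):
    """Gradient of the toy loss f(w) = (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)


def sgd_step(w, lr):
    # Plain gradient descent: step directly against the gradient
    return w - lr * gradient(w)


def momentum_step(w, velocity, lr, momentum):
    # Momentum keeps a running velocity that accumulates past gradients
    velocity = momentum * velocity - lr * gradient(w)
    return w + velocity, velocity


w_sgd = 0.0
w_mom, velocity = 0.0, 0.0
for _ in range(200):
    w_sgd = sgd_step(w_sgd, lr=0.1)
    w_mom, velocity = momentum_step(w_mom, velocity, lr=0.1, momentum=0.9)

print("SGD:", w_sgd, "Momentum SGD:", w_mom)
```

Both runs end close to the minimum at 3; the momentum variant overshoots and oscillates on the way, which is the same behaviour that helps it roll through shallow local minima on more complex loss surfaces.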

CNTK – Creating First Neural Network

This chapter will elaborate on creating a neural network in CNTK.

Build the network structure

In order to apply CNTK concepts to build our first NN, we are going to use a NN to classify species of iris flowers based on the physical properties of sepal width and length, and petal width and length. We will be using the iris dataset, which describes the physical properties of different varieties of iris flowers −

- Sepal length

- Sepal width

- Petal length

- Petal width

- Class i.e. iris setosa or iris versicolor or iris virginica

Here, we will be building a regular NN called a feedforward NN. Let us see the implementation steps to build the structure of NN −

Step 1 − First, we will import the necessary components such as our layer types, activation functions, and a function that allows us to define an input variable for our NN, from CNTK library.

from cntk import default_options, input_variable

from cntk.layers import Dense, Sequential

from cntk.ops import log_softmax, relu

Step 2 − After that, we will create our model using the Sequential function. Once created, we will feed it with the layers we want. Here, we are going to create two distinct layers in our NN: one with four neurons and another with three neurons.

model = Sequential([Dense(4, activation=relu), Dense(3, activation=log_softmax)])

Step 3 − At last, in order to compile the NN, we will bind the network to the input variable. It has an input layer with four neurons and an output layer with three neurons.

feature = input_variable(4)

z = model(feature)

Applying an activation function

There are lots of activation functions to choose from and choosing the right activation function will definitely make a big difference to how well our deep learning model will perform.

At the output layer

Choosing an activation function at the output layer will depend upon the kind of problem we are going to solve with our model.

-

For a regression problem, we should use a linear activation function on the output layer.

-

For a binary classification problem, we should use a sigmoid activation function on the output layer.

-

For multi-class classification problem, we should use a softmax activation function on the output layer.

Here, we are going to build a model for predicting one of three classes, which means we need to use the softmax activation function at the output layer.
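As a worked illustration of why softmax suits a multi-class output layer, the following plain-Python sketch (not CNTK code; the scores are made-up values) turns three raw output scores into values that behave like class probabilities.

```python
import math


def softmax(scores):
    """Map raw scores to positive values that sum to 1."""
    shifted = [s - max(scores) for s in scores]   # subtract the max for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])   # hypothetical raw outputs for three classes
print(probs)
```

The largest score receives the largest share, and because the outputs sum to 1 they can be read as the model's confidence in each of the three classes.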

At the hidden layer

Choosing an activation function at the hidden layer requires some experimentation for monitoring the performance to see which activation function works well.

-

In a classification problem, we need to predict the probability a sample belongs to a specific class. That’s why we need an activation function that gives us probabilistic values. To reach this goal, sigmoid activation function can help us.

-

One of the major problems associated with the sigmoid function is the vanishing gradient problem. To overcome this problem, we can use the ReLU activation function, which converts all negative values to zero and works as a pass-through filter for positive values.
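The vanishing gradient problem can be seen numerically with a short plain-Python sketch. The helper names below are chosen for illustration and are not CNTK functions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x):
    """Zero for negative inputs, pass-through for positive inputs."""
    return max(0.0, x)


def relu_grad(x):
    return 1.0 if x > 0 else 0.0


for value in (0.0, 5.0, 10.0):
    print(value, sigmoid_grad(value), relu_grad(value))
```

The sigmoid gradient is at most 0.25 and shrinks towards zero for large inputs, so multiplying many such gradients across layers makes the update signal fade; the ReLU gradient stays at 1 for any positive input.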

Picking a loss function

Once we have the structure for our NN model, we must optimise it. For optimising, we need a loss function. Unlike activation functions, there are only a few loss functions to choose from. However, the choice of loss function depends upon the kind of problem we are going to solve with our model.

For example, in a classification problem, we should use a loss function that can measure the difference between a predicted class and an actual class.

loss function

For the classification problem we are going to solve with our NN model, the categorical cross-entropy loss function is the best candidate. In CNTK, it is implemented as cross_entropy_with_softmax, which can be imported from the cntk.losses package, as follows −

from cntk.losses import cross_entropy_with_softmax

label = input_variable(3)

loss = cross_entropy_with_softmax(z, label)
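To show what categorical cross entropy measures, here is a minimal plain-Python sketch. It is not the CNTK implementation: it takes already-normalised probabilities, and the function name and sample values are assumptions for illustration.

```python
import math


def cross_entropy(probabilities, one_hot_label):
    """Negative log of the probability assigned to the true class."""
    return -sum(t * math.log(p)
                for p, t in zip(probabilities, one_hot_label) if t > 0)


true_label = [1, 0, 0]
confident = cross_entropy([0.7, 0.2, 0.1], true_label)   # true class gets 0.7
unsure = cross_entropy([0.4, 0.3, 0.3], true_label)      # true class gets 0.4
wrong = cross_entropy([0.1, 0.2, 0.7], true_label)       # true class gets 0.1
print(confident, unsure, wrong)
```

The loss grows as the probability given to the correct class shrinks, so minimising it pushes the model towards confident, correct predictions.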

Metrics

With the structure for our NN model and a loss function to apply, we have all the ingredients to start optimising our deep learning model. But before diving into this, we should learn about metrics.

cntk.metrics

CNTK has a package named cntk.metrics from which we can import the metrics we are going to use. As we are building a classification model, we will be using the classification_error metric, which produces a number between 0 and 1. This number indicates the fraction of samples that were predicted incorrectly −

First, we need to import the metric from the cntk.metrics package −

from cntk.metrics import classification_error

error_rate = classification_error(z, label)

The above function actually needs the output of the NN and the expected label as input.
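The meaning of such an error metric can be sketched in plain Python as follows. This is a hand-written stand-in with made-up scores, not the CNTK function itself.

```python
def classification_error(predicted_scores, one_hot_labels):
    """Fraction of samples whose highest-scoring class differs from the label."""
    wrong = 0
    for scores, label in zip(predicted_scores, one_hot_labels):
        if scores.index(max(scores)) != label.index(max(label)):
            wrong += 1
    return wrong / len(one_hot_labels)


scores = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.3, 0.4, 0.3], [0.1, 0.2, 0.7]]
labels = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 0, 1]]
error = classification_error(scores, labels)   # third sample is misclassified
print(error)
```

A value of 0 means every sample was classified correctly, and a value of 1 means none were.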

CNTK – Training the Neural Network

Here, we will understand about training the Neural Network in CNTK.

Training a model in CNTK

In the previous section, we have defined all the components for the deep learning model. Now it is time to train it. As we discussed earlier, we can train a NN model in CNTK using the combination of learner and trainer.

Choosing a learner and setting up training

In this section, we will be defining the learner. CNTK provides several learners to choose from. For our model, defined in previous sections, we will be using Stochastic Gradient Descent (SGD) learner.

In order to train the neural network, let us configure the learner and trainer with the help of following steps −

Step 1 − First, we need to import the sgd function from the cntk.learners package.

from cntk.learners import sgd

Step 2 − Next, we need to import the Trainer class from the cntk.train.trainer package.

from cntk.train.trainer import Trainer

Step 3 − Now, we need to create a learner. It can be created by invoking sgd function along with providing model’s parameters and a value for the learning rate.

learner = sgd(z.parameters, 0.01)

Step 4 − At last, we need to initialize the trainer. It must be provided the network, the combination of the loss and metric along with the learner.

trainer = Trainer(z, (loss, error_rate), [learner])

The learning rate, which controls the speed of optimisation, should be a small number, typically between 0.001 and 0.1.
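The effect of the learning rate can be seen on a toy problem. The sketch below, in plain Python and independent of CNTK, minimises f(w) = w² by gradient descent; the function name and the chosen rates are illustrative assumptions.

```python
def minimise(lr, steps=20, start=1.0):
    """Run gradient descent on f(w) = w**2, whose gradient is 2 * w."""
    w = start
    for _ in range(steps):
        w -= lr * 2 * w
    return w


slow = minimise(0.01)      # small steps: still far from the minimum at 0
fast = minimise(0.1)       # larger steps: much closer after the same number of steps
diverged = minimise(1.1)   # too large: every step overshoots and the value grows
print(slow, fast, diverged)
```

A rate that is too small wastes iterations, while one that is too large makes the optimisation unstable, which is why a small value is the usual starting point.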

Choosing a learner and setting up the training – Complete example

from cntk.learners import sgd

from cntk.train.trainer import Trainer

learner = sgd(z.parameters, 0.01)

trainer = Trainer(z, (loss, error_rate), [learner])

Feeding data into the trainer

Once we have chosen and configured the trainer, it is time to load the dataset. We have saved the iris dataset as a .CSV file, and we will be using a data wrangling package named pandas to load it.

Steps to load the dataset from .CSV file

Step 1 − First, we need to import the pandas package.

import pandas as pd

Step 2 − Now, we need to invoke the read_csv function to load the .csv file from the disk.

df_source = pd.read_csv('iris.csv', names=['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'species'], index_col=False)

Once we load the dataset, we need to split it into a set of features and a label.

Steps to split the dataset into features and label

Step 1 − First, we need to select all rows and first four columns from the dataset. It can be done by using iloc function.

x = df_source.iloc[:, :4].values

Step 2 − Next, we need to select the species column from the iris dataset. We will be using the values property to access the underlying numpy array.

y = df_source['species'].values

Steps to encode the species column to a numeric vector representation

As we discussed earlier, our model is a classification model and requires numeric input values. Hence, we need to encode the species column to a numeric vector representation. Let’s see the steps to do it −

Step 1 − First, we need to create a label_mapping dictionary that maps each species name to a number. Later, a list expression will iterate over all elements in the array and look up each value in this dictionary.

label_mapping = {'Iris-Setosa': 0, 'Iris-Versicolor': 1, 'Iris-Virginica': 2}

Step 2 − Next, convert each numeric value to a one-hot encoded vector. We will be using a one_hot function as follows −

def one_hot(index, length):

    result = np.zeros(length)

    result[index] = 1

    return result

Step 3 − At last, we need to turn this converted list into a numpy array.

y = np.array([one_hot(label_mapping[v], 3) for v in y])

Steps to detect overfitting

Overfitting is the situation in which a model memorises the training samples but cannot deduce general rules from them. With the help of the following steps, we can detect overfitting in our model −

Step 1 − First, from sklearn package, import the train_test_split function from the model_selection module.

from sklearn.model_selection import train_test_split

Step 2 − Next, we need to invoke the train_test_split function with features x and labels y as follows −

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, stratify=y)

We specified a test_size of 0.2 to set aside 20% of total data.
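What a stratified 80/20 split does can be sketched in plain Python. This is a simplified stand-in for illustration, not scikit-learn's implementation; the function name, the seed and the synthetic labels are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_size=0.2, seed=0):
    """Return (train_indices, test_indices), keeping each class's share equal."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)                       # pick random members of each class
        cut = round(len(indices) * test_size)
        test.extend(indices[:cut])
        train.extend(indices[cut:])
    return sorted(train), sorted(test)


labels = ["setosa"] * 50 + ["versicolor"] * 50 + ["virginica"] * 50
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Splitting class by class guarantees that the test set contains every species in the same proportion as the full dataset, which makes the test metric a fairer comparison against the training metric.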


Steps to feed training set and validation set to our model

Step 1 − In order to train our model, first, we will be invoking the train_minibatch method. Then give it a dictionary that maps the input data to the input variable that we have used to define the NN and its associated loss function.

trainer.train_minibatch({feature: X_train, label: y_train})

Step 2 − Next, call train_minibatch repeatedly by using the following for loop. Since the metric we configured is classification_error, the second value printed is the error rate −

for _epoch in range(10):

    trainer.train_minibatch({feature: X_train, label: y_train})

    print('Loss: {}, Error: {}'.format(

        trainer.previous_minibatch_loss_average,

        trainer.previous_minibatch_evaluation_average))

Feeding data into the trainer – Complete example

import numpy as np

import pandas as pd

df_source = pd.read_csv('iris.csv', names=['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'species'], index_col=False)

x = df_source.iloc[:, :4].values

y = df_source['species'].values

label_mapping = {'Iris-Setosa': 0, 'Iris-Versicolor': 1, 'Iris-Virginica': 2}

def one_hot(index, length):

    result = np.zeros(length)

    result[index] = 1

    return result

y = np.array([one_hot(label_mapping[v], 3) for v in y])

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, stratify=y)

trainer.train_minibatch({feature: X_train, label: y_train})

for _epoch in range(10):

    trainer.train_minibatch({feature: X_train, label: y_train})

    print('Loss: {}, Error: {}'.format(

        trainer.previous_minibatch_loss_average,

        trainer.previous_minibatch_evaluation_average))

Measuring the performance of NN

In order to optimise our NN model, whenever we pass data through the trainer, it measures the performance of the model through the metric that we configured for trainer. Such measurement of performance of NN model during training is on training data. But on the other hand, for a full analysis of the model performance we need to use test data as well.

So, to measure the performance of the model using the test data, we can invoke the test_minibatch method on the trainer as follows −

trainer.test_minibatch({feature: X_test, label: y_test})

Making prediction with NN

Once you have trained a deep learning model, the most important thing is to make predictions with it. In order to make a prediction with the NN trained above, we can follow the given steps −

Step 1 − First, we need to pick a random item from the test set using the following function −

np.random.choice

Step 2 − Next, we need to select the sample data from the test set by using sample_index.

Step 3 − Now, in order to convert the numeric output of the NN to an actual label, create an inverted mapping.

Step 4 − Now, use the selected sample data. Make a prediction by invoking the NN z as a function.

Step 5 − Now, once you got the predicted output, take the index of the neuron that has the highest value as the predicted value. It can be done by using the np.argmax function from the numpy package.

Step 6 − At last, convert the index value into the real label by using inverted_mapping.

Making prediction with NN – Complete example

sample_index = np.random.choice(X_test.shape[0])

sample = X_test[sample_index]

inverted_mapping = {

0: 'Iris-setosa',

1: 'Iris-versicolor',

2: 'Iris-virginica'

}

prediction = z(sample)

predicted_label = inverted_mapping[np.argmax(prediction)]

print(predicted_label)

Output

After training the above deep learning model and running it, you will get the following output −

Iris-versicolor
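The decoding step (Steps 3, 5 and 6) can be tried without a trained network. The plain-Python sketch below uses made-up output scores; the decode helper and the score values are assumptions for illustration. Note that the indices start at 0, because that is what an argmax over three output neurons returns.

```python
label_mapping = {"Iris-setosa": 0, "Iris-versicolor": 1, "Iris-virginica": 2}

# Invert the mapping so that an output index leads back to a species name.
inverted_mapping = {index: name for name, index in label_mapping.items()}


def decode(scores):
    """Return the label of the output neuron with the highest value."""
    return inverted_mapping[scores.index(max(scores))]


predicted_label = decode([-2.3, -0.2, -1.9])   # hypothetical log-softmax outputs
print(predicted_label)
```

Building the inverted mapping from label_mapping, rather than typing it by hand, keeps the two dictionaries consistent.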

CNTK – In-Memory and Large Datasets

In this chapter, we will learn about how to work with the in-memory and large datasets in CNTK.

Training with small in memory datasets

When we talk about feeding data into CNTK trainer, there can be many ways, but it will depend upon the size of the dataset and format of the data. The data sets can be small in-memory or large datasets.

In this section, we are going to work with in-memory datasets. For this, we will use the following two frameworks −

- Numpy

- Pandas

Using Numpy arrays

Here, we will work with a numpy based randomly generated dataset in CNTK. In this example, we are going to simulate data for a binary classification problem. Suppose, we have a set of observations with 4 features and want to predict two possible labels with our deep learning model.

Implementation Example

For this, first we must generate a set of labels containing a one-hot vector representation of the labels, we want to predict. It can be done with the help of following steps −

Step 1 − Import the numpy package and set the number of samples as follows −

import numpy as np

num_samples = 20000

Step 2 − Next, generate a label mapping by using np.eye function as follows −

label_mapping = np.eye(2)

Step 3 − Now by using np.random.choice function, collect the 20000 random samples as follows −

y = label_mapping[np.random.choice(2,num_samples)].astype(np.float32)

Step 4 − Now at last by using np.random.random function, generate an array of random floating point values as follows −

x = np.random.random(size=(num_samples, 4)).astype(np.float32)

When generating the arrays above, we converted them to 32-bit floating point numbers with astype so that they match the format expected by CNTK. Next, let’s follow the steps below to build the network −

Step 5 − Import the Dense and Sequential layer functions from cntk.layers module as follows −

from cntk.layers import Dense, Sequential

Step 6 − Now, we need to import the activation function for the layers in the network. Let us import the sigmoid as activation function −

from cntk import input_variable, default_options

from cntk.ops import sigmoid

Step 7 − Now, we need to import the loss function to train the network. Let us import binary_cross_entropy as loss function −

from cntk.losses import binary_cross_entropy

Step 8 − Next, we need to define the default options for the network. Here, we will be providing the sigmoid activation function as a default setting. Also, create the model by using Sequential layer function as follows −

with default_options(activation=sigmoid):

    model = Sequential([Dense(6), Dense(2)])

Step 9 − Next, initialise an input_variable with 4 input features serving as the input for the network.

features = input_variable(4)

Step 10 − Now, in order to complete it, we need to connect features variable to the NN.

z = model(features)

So, now we have a NN, with the help of following steps, let us train it using in-memory dataset −

Step 11 − To train this NN, first we need to import learner from cntk.learners module. We will import sgd learner as follows −

from cntk.learners import sgd

Step 12 − Along with that import the ProgressPrinter from cntk.logging module as well.

from cntk.logging import ProgressPrinter

progress_writer = ProgressPrinter(0)

Step 13 − Next, define a new input variable for the labels as follows −

labels = input_variable(2)

Step 14 − In order to train the NN model, next, we need to define a loss using the binary_cross_entropy function. Also, provide the model z and the labels variable.

loss = binary_cross_entropy(z, labels)

Step 15 − Next, initialize the sgd learner as follows −

learner = sgd(z.parameters, lr=0.1)

Step 16 − At last, call the train method on the loss function. Also, provide it with the input data, the sgd learner and the progress_writer −

training_summary=loss.train((x,y),parameter_learners=[learner],callbacks=[progress_writer])

Complete implementation example

import numpy as np

num_samples = 20000

label_mapping = np.eye(2)

y = label_mapping[np.random.choice(2, num_samples)].astype(np.float32)

x = np.random.random(size=(num_samples, 4)).astype(np.float32)

from cntk.layers import Dense, Sequential

from cntk import input_variable, default_options

from cntk.ops import sigmoid

from cntk.losses import binary_cross_entropy

with default_options(activation=sigmoid):

    model = Sequential([Dense(6), Dense(2)])

features = input_variable(4)

z = model(features)

from cntk.learners import sgd

from cntk.logging import ProgressPrinter

progress_writer = ProgressPrinter(0)

labels = input_variable(2)

loss = binary_cross_entropy(z, labels)

learner = sgd(z.parameters, lr=0.1)

training_summary = loss.train((x, y), parameter_learners=[learner], callbacks=[progress_writer])

Output

Build info:

Built time: *** ** **** 21:40:10

Last modified date: *** *** ** 21:08:46 2019

Build type: Release

Build target: CPU-only

With ASGD: yes

Math lib: mkl

Build Branch: HEAD

Build SHA1:ae9c9c7c5f9e6072cc9c94c254f816dbdc1c5be6 (modified)

MPI distribution: Microsoft MPI

MPI version: 7.0.12437.6

-------------------------------------------------------------------

average since average since examples

loss last metric last

------------------------------------------------------

Learning rate per minibatch: 0.1

1.52 1.52 0 0 32

1.51 1.51 0 0 96

1.48 1.46 0 0 224

1.45 1.42 0 0 480

1.42 1.4 0 0 992

1.41 1.39 0 0 2016

1.4 1.39 0 0 4064

1.39 1.39 0 0 8160

1.39 1.39 0 0 16352

Using Pandas DataFrames

Numpy arrays are one of the most basic ways of storing data and are limited in what they can contain. For example, a single n-dimensional array can contain data of only a single data type. For many real-world cases, however, we need a library that can handle more than one data type in a single dataset.

The Python library Pandas makes it easier to work with such datasets. It introduces the concept of a DataFrame (DF) and allows us to load datasets stored on disk in various formats as DFs. For example, we can read data stored as CSV, JSON, Excel, etc.

You can learn Python Pandas library in more detail at

Implementation Example

In this example, we are going to use the example of classifying three possible species of the iris flowers based on four properties. We have created this deep learning model in the previous sections too. The model is as follows −

from cntk.layers import Dense, Sequential

from cntk import input_variable, default_options

from cntk.ops import sigmoid, log_softmax

from cntk.losses import binary_cross_entropy

model = Sequential([

    Dense(4, activation=sigmoid),

    Dense(3, activation=log_softmax)

])

features = input_variable(4)

z = model(features)

The above model contains one hidden layer and an output layer with three neurons to match the number of classes we can predict.

Next, we will use the train method and loss function to train the network. For this, first we must load and preprocess the iris dataset, so that it matches the expected layout and data format for the NN. It can be done with the help of following steps −

Step 1 − Import the numpy and Pandas package as follows −

import numpy as np import pandas as pd

Step 2 − Next, use the read_csv function to load the dataset into memory −

df_source = pd.read_csv('iris.csv', names=['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'species'], index_col=False)

Step 3 − Now, we need to create a dictionary that will be mapping the labels in the dataset with their corresponding numeric representation.

label_mapping = {'Iris-Setosa': 0, 'Iris-Versicolor': 1, 'Iris-Virginica': 2}

Step 4 − Now, by using iloc indexer on the DataFrame, select the first four columns as follows −

x = df_source.iloc[:, :4].values

Step 5 −Next, we need to select the species columns as the labels for the dataset. It can be done as follows −

y = df_source['species'].values

Step 6 − Now, we need to map the labels in the dataset by using label_mapping and convert them into one-hot encoded arrays. The labels are stored in the dataset as strings, and CNTK cannot work with strings; it needs one-hot encoded vectors representing the labels. For this, we first define a function, say one_hot, as follows −

def one_hot(index, length):

    result = np.zeros(length)

    result[index] = 1

    return result

With the function defined, we can encode the labels −

y = np.array([one_hot(label_mapping[v], 3) for v in y])

Step 7 − Next, to use the features and the mapped labels with CNTK, we need to convert them both to 32-bit floats −

x = x.astype(np.float32)

y = y.astype(np.float32)

Now that we have the numpy arrays in the correct format, we can use them to train our model with the help of the following steps −

Step 8 − First, we need to import the loss function to train the network. Let us import cross_entropy_with_softmax as the loss function −

from cntk.losses import cross_entropy_with_softmax

Step 9 − To train this NN, we also need to import learner from cntk.learners module. We will import sgd learner as follows −

from cntk.learners import sgd

Step 10 − Along with that import the ProgressPrinter from cntk.logging module as well.

from cntk.logging import ProgressPrinter

progress_writer = ProgressPrinter(0)

Step 11 − Next, define a new input variable for the labels as follows −

labels = input_variable(3)

Step 12 − In order to train the NN model, next, we need to define a loss using the cross_entropy_with_softmax function. Also provide the model z and the labels variable.

loss = cross_entropy_with_softmax(z, labels)

Step 13 − Next, initialise the sgd learner as follows −

learner = sgd(z.parameters, 0.1)

Step 14 − At last, call the train method on the loss function. Also, provide it with the input data, the sgd learner and the progress_writer.

training_summary=loss.train((x,y),parameter_learners=[learner],callbacks= [progress_writer],minibatch_size=16,max_epochs=5)

Complete implementation example

from cntk.layers import Dense, Sequential

from cntk import input_variable, default_options

from cntk.ops import sigmoid, log_softmax

from cntk.losses import binary_cross_entropy

model = Sequential([

    Dense(4, activation=sigmoid),

    Dense(3, activation=log_softmax)

])

features = input_variable(4)

z = model(features)

import numpy as np

import pandas as pd

df_source = pd.read_csv('iris.csv', names=['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'species'], index_col=False)

label_mapping = {'Iris-Setosa': 0, 'Iris-Versicolor': 1, 'Iris-Virginica': 2}

x = df_source.iloc[:, :4].values

y = df_source['species'].values

def one_hot(index, length):

    result = np.zeros(length)

    result[index] = 1

    return result

y = np.array([one_hot(label_mapping[v], 3) for v in y])

x = x.astype(np.float32)

y = y.astype(np.float32)

from cntk.losses import cross_entropy_with_softmax

from cntk.learners import sgd

from cntk.logging import ProgressPrinter

progress_writer = ProgressPrinter(0)

labels = input_variable(3)

loss = cross_entropy_with_softmax(z, labels)

learner = sgd(z.parameters, 0.1)

training_summary = loss.train((x, y), parameter_learners=[learner], callbacks=[progress_writer], minibatch_size=16, max_epochs=5)

Output

Build info:

Built time: *** ** **** 21:40:10

Last modified date: *** *** ** 21:08:46 2019

Build type: Release

Build target: CPU-only

With ASGD: yes

Math lib: mkl

Build Branch: HEAD

Build SHA1:ae9c9c7c5f9e6072cc9c94c254f816dbdc1c5be6 (modified)

MPI distribution: Microsoft MPI

MPI version: 7.0.12437.6

-------------------------------------------------------------------

average since average since examples

loss last metric last

------------------------------------------------------

Learning rate per minibatch: 0.1

1.1 1.1 0 0 16

0.835 0.704 0 0 32

1.993 1.11 0 0 48

1.14 1.14 0 0 112

[………]

Training with large datasets

In the previous section, we worked with small in-memory datasets using Numpy and pandas, but not all datasets are so small. Datasets containing images, videos or sound samples are especially large. To work with such large datasets, CNTK provides MinibatchSource, a component that can load data in chunks. Some of the features of the MinibatchSource component are as follows −

-

MinibatchSource can help prevent the NN from overfitting by automatically randomizing the samples read from the data source.

-

It has built-in transformation pipeline which can be used to augment the data.

-

It loads the data on a background thread separate from the training process.

In the following sections, we are going to explore how to use a minibatch source with out-of-memory data to work with large datasets. We will also explore how to use it to feed data to a NN during training.
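The idea of serving randomized chunks can be sketched with a small Python generator. This is only a conceptual stand-in: it shuffles an in-memory list, whereas MinibatchSource reads from disk, and the function name and parameters are assumptions.

```python
import random


def minibatches(samples, minibatch_size, randomize=True, seed=0):
    """Yield the samples in chunks, optionally in a shuffled order."""
    order = list(range(len(samples)))
    if randomize:
        random.Random(seed).shuffle(order)
    for start in range(0, len(order), minibatch_size):
        yield [samples[i] for i in order[start:start + minibatch_size]]


# 150 sample ids served in chunks of 16: nine full chunks and one chunk of 6.
batches = list(minibatches(list(range(150)), 16))
print(len(batches), len(batches[-1]))
```

Because the generator produces one chunk at a time, the training loop only ever holds a single minibatch, which is the property that makes the same pattern workable for data that does not fit in memory.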

Creating MinibatchSource instance

In the previous section, we have used iris flower example and worked with small in-memory dataset using Pandas DataFrames. Here, we will be replacing the code that uses data from a pandas DF with MinibatchSource. First, we need to create an instance of MinibatchSource with the help of following steps −

Implementation Example

Step 1 − First, from cntk.io module import the components for the minibatchsource as follows −

from cntk.io import StreamDef, StreamDefs, MinibatchSource, CTFDeserializer, INFINITY_REPEAT

Step 2 − Now, by using the StreamDef class, create a stream definition for the labels.

labels_stream = StreamDef(field='labels', shape=3, is_sparse=False)

Step 3 − Next, to read the features field from the input file, create another instance of StreamDef as follows.

features_stream = StreamDef(field='features', shape=4, is_sparse=False)

Step 4 − Now, we need to provide the iris.ctf file as input and initialise the deserializer as follows −

deserializer = CTFDeserializer('iris.ctf', StreamDefs(labels=labels_stream, features=features_stream))

Step 5 − At last, we need to create an instance of MinibatchSource by using the deserializer as follows −

minibatch_source = MinibatchSource(deserializer, randomize=True)

Creating a MinibatchSource instance – Complete implementation example

from cntk.io import StreamDef, StreamDefs, MinibatchSource, CTFDeserializer, INFINITY_REPEAT

labels_stream = StreamDef(field='labels', shape=3, is_sparse=False)

features_stream = StreamDef(field='features', shape=4, is_sparse=False)

deserializer = CTFDeserializer('iris.ctf', StreamDefs(labels=labels_stream, features=features_stream))

minibatch_source = MinibatchSource(deserializer, randomize=True)

Creating CTF file

As you have seen above, we are taking the data from ‘iris.ctf’ file. It has the file format called CNTK Text Format(CTF). It is mandatory to create a CTF file to get the data for the MinibatchSource instance we created above. Let us see how we can create a CTF file.
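In a CTF file, each line holds one sample, and each field starts with a pipe character followed by the field name and its values. The plain-Python sketch below formats and parses one such line; the helper names are hypothetical, and the parser covers only this simple dense layout.

```python
def to_ctf_line(features, label):
    """Format one sample as a CTF line with a features and a labels field."""
    feature_text = " ".join(str(v) for v in features)
    label_text = " ".join(str(v) for v in label)
    return "|features {} |labels {}".format(feature_text, label_text)


def parse_ctf_line(line):
    """Split a simple dense CTF line back into named lists of floats."""
    fields = {}
    for chunk in line.split("|")[1:]:
        name, *values = chunk.split()
        fields[name] = [float(v) for v in values]
    return fields


line = to_ctf_line([5.1, 3.5, 1.4, 0.2], [1, 0, 0])
print(line)
print(parse_ctf_line(line))
```

The field names used in the file are the ones that the StreamDef definitions refer to through their field argument, so the two must match.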

Implementation Example

Step 1 − First, we need to import the pandas and numpy packages as follows −

import pandas as pd

import numpy as np

Step 2 − Next, we need to load our data file, i.e. iris.csv into memory. Then, store it in the df_source variable.

df_source = pd.read_csv('iris.csv', names=['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'species'], index_col=False)

Step 3 − Now, by using the iloc indexer, take the content of the first four columns as the features. Also, use the data from the species column as the labels −

features = df_source.iloc[:, :4].values

labels = df_source['species'].values

Step 4 − Next, we need to create a mapping between the label name and its numeric representation. It can be done by creating label_mapping as follows −

label_mapping = {'Iris-Setosa': 0, 'Iris-Versicolor': 1, 'Iris-Virginica': 2}

Step 5 − Now, convert the labels to a set of one-hot encoded vectors. As we did before, first create a utility function called one_hot to encode the labels −

def one_hot(index, length):

    result = np.zeros(length)

    result[index] = 1

    return result

Then apply it to every label as follows −

labels = [one_hot(label_mapping[v], 3) for v in labels]

Now that we have loaded and preprocessed the data, it’s time to store it on disk in the CTF file format. We can do it with the help of the following Python code −

with open('iris.ctf', 'w') as output_file:

    for index in range(0, features.shape[0]):

        feature_values = ' '.join([str(x) for x in np.nditer(features[index])])

        label_values = ' '.join([str(x) for x in np.nditer(labels[index])])

        output_file.write('|features {} |labels {}\n'.format(feature_values, label_values))

Creating a CTF file – Complete implementation example

import pandas as pd

import numpy as np

df_source = pd.read_csv('iris.csv', names=['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'species'], index_col=False)

features = df_source.iloc[:, :4].values

labels = df_source['species'].values

label_mapping = {'Iris-Setosa': 0, 'Iris-Versicolor': 1, 'Iris-Virginica': 2}

def one_hot(index, length):

    result = np.zeros(length)

    result[index] = 1

    return result

labels = [one_hot(label_mapping[v], 3) for v in labels]

with open('iris.ctf', 'w') as output_file:

    for index in range(0, features.shape[0]):

        feature_values = ' '.join([str(x) for x in np.nditer(features[index])])

        label_values = ' '.join([str(x) for x in np.nditer(labels[index])])

        output_file.write('|features {} |labels {}\n'.format(feature_values, label_values))

Feeding the data

Once we have created the MinibatchSource instance, we need to train the model with it. We can use the same training logic as we used when working with small in-memory datasets. Here, we will use the MinibatchSource instance as the input for the train method on the loss function as follows −

Implementation Example

Step 1 − In order to log the output of the training session, first import the ProgressPrinter from cntk.logging module as follows −

from cntk.logging import ProgressPrinter

Step 2 − Next, to set up the training session, import Trainer and training_session from the cntk.train module as follows −

from cntk.train import Trainer, training_session

Step 3 − Now, we need to define a set of constants, namely minibatch_size, samples_per_epoch and num_epochs, as follows −

minibatch_size = 16

samples_per_epoch = 150

num_epochs = 30

Step 4 − Next, so that CNTK knows how to read data during training, we need to define a mapping between the input variables of the network and the streams in the minibatch source.

input_map = {

    features: minibatch_source.streams.features,

    labels: minibatch_source.streams.labels

}

Step 5 − Next, to log the output of the training process, initialise the progress_writer variable with a new ProgressPrinter instance as follows −

progress_writer = ProgressPrinter(0)

Step 6 − At last, we need to invoke the train method on the loss as follows −

train_history = loss.train(minibatch_source, parameter_learners=[learner], model_inputs_to_streams=input_map, callbacks=[progress_writer], epoch_size=samples_per_epoch, max_epochs=num_epochs)

Feeding the data – Complete implementation example

from cntk.logging import ProgressPrinter

from cntk.train import Trainer, training_session

minibatch_size = 16

samples_per_epoch = 150

num_epochs = 30

input_map = {

    features: minibatch_source.streams.features,

    labels: minibatch_source.streams.labels

}

progress_writer = ProgressPrinter(0)

train_history = loss.train(minibatch_source,

parameter_learners=[learner],

model_inputs_to_streams=input_map,

callbacks=[progress_writer],

epoch_size=samples_per_epoch,

max_epochs=num_epochs)

Output

-------------------------------------------------------------------

average since average since examples

loss last metric last

------------------------------------------------------

Learning rate per minibatch: 0.1

1.21 1.21 0 0 32

1.15 0.12 0 0 96

[………]

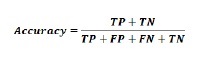

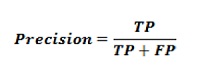

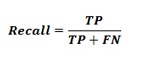

CNTK – Measuring Performance

This chapter explains how to measure model performance in CNTK.

Strategy to validate model performance

After building an ML model, we train it using a set of data samples. Through this training, the model learns and derives some general rules. The performance of the model matters most when we feed it new samples, i.e., samples different from those provided at the time of training. The model may behave differently in that case, and it may be worse at making good predictions on those new samples.

But the model must work well for new samples too, because in a production environment we will get different input than the sample data we used for training. That is why we should validate the ML model using a set of samples different from the samples we used for training. Here, we are going to discuss two different techniques for creating a dataset for validating a NN.

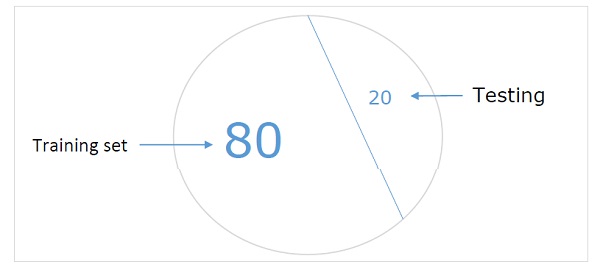

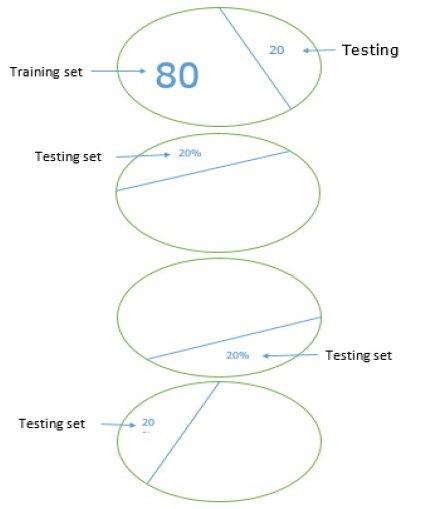

Hold-out dataset

It is one of the easiest methods for creating a dataset to validate a NN. As the name implies, in this method we hold back one set of samples from training (say 20%) and use it to test the performance of our ML model. Following diagram shows the ratio between training and validation samples −

The hold-out dataset technique ensures that we have enough data to train our ML model and, at the same time, a reasonable number of samples to get a good measurement of the model’s performance.

It is good practice to choose the samples for the training set and the test set at random from the main dataset. This ensures an even distribution between the training and test sets.

Following is an example in which we produce our own hold-out dataset by using the train_test_split function from the scikit-learn library.

Example

from sklearn.datasets import load_iris

iris = load_iris()

X = iris.data

y = iris.target

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

# test_size=0.2 holds back 20% of the data as the test set.

from sklearn.neighbors import KNeighborsClassifier

from sklearn import metrics

classifier_knn = KNeighborsClassifier(n_neighbors=3)

classifier_knn.fit(X_train, y_train)

y_pred = classifier_knn.predict(X_test)

# Providing sample data and the model will make prediction out of that data

sample = [[5, 5, 3, 2], [2, 4, 3, 5]]

preds = classifier_knn.predict(sample)

pred_species = [iris.target_names[p] for p in preds]
print("Predictions:", pred_species)

Output

Predictions: ['versicolor', 'virginica']

While using CNTK, we need to randomise the order of our dataset each time we train our model because −

-

Deep learning algorithms are highly influenced by the random-number generators.

-

The order in which we provide the samples to NN during training greatly affects its performance.
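The point about sample order can be illustrated without CNTK. The sketch below is a plain-Python illustration, not CNTK's reader: it draws a new sample order for every epoch from a seeded random-number generator, so the order changes between epochs while the run as a whole stays reproducible. The function name epoch_orders and the seed value are hypothetical choices made for this example.

```python
import random

def epoch_orders(num_samples, num_epochs, seed=1):
    """Yield a freshly shuffled list of sample indices for each epoch."""
    rng = random.Random(seed)          # seeded so the whole run is reproducible
    for _ in range(num_epochs):
        order = list(range(num_samples))
        rng.shuffle(order)             # new random order for this epoch
        yield order

orders = list(epoch_orders(num_samples=10, num_epochs=3))
for epoch, order in enumerate(orders, start=1):
    print("epoch", epoch, order)
```

In CNTK itself, the randomize argument of MinibatchSource (used by the create_reader helper later in this tutorial) is what switches this kind of shuffling on or off.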

The major downside of the hold-out dataset technique is that it is unreliable: depending on which samples end up in the test set, we sometimes get very good results and sometimes bad results.

K-fold cross validation

To make the performance measurement of our ML model more reliable, there is a technique called K-fold cross validation. In essence, it is the same as the previous technique, but it repeats the process several times, usually about 5 to 10 times. Following diagram represents its concept −

Working of K-fold cross validation

The working of K-fold cross validation can be understood with the help of following steps −

Step 1 − As in the hold-out dataset technique, in the K-fold cross validation technique we first need to split the dataset into a training set and a test set. Ideally, the ratio is 80-20, i.e. 80% for the training set and 20% for the test set.

Step 2 − Next, we need to train our model using the training set.

Step 3 − Finally, we use the test set to measure the performance of our model.

The only difference between the hold-out dataset technique and the K-fold cross validation technique is that the above process is repeated, usually 5 to 10 times, with a different part of the data held out as the test set each time. At the end, the performance metrics are averaged over all the runs, and that average is the final performance metric.

Let us see an example with a small dataset −

Example

from numpy import array

from sklearn.model_selection import KFold

data = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0])

kfold = KFold(n_splits=5, shuffle=True, random_state=1)
for train, test in kfold.split(data):
    print('train: %s, test: %s' % (data[train], data[test]))

Output

train: [0.1 0.2 0.4 0.5 0.6 0.7 0.8 0.9], test: [0.3 1. ]
train: [0.1 0.2 0.3 0.4 0.6 0.8 0.9 1. ], test: [0.5 0.7]
train: [0.2 0.3 0.5 0.6 0.7 0.8 0.9 1. ], test: [0.1 0.4]
train: [0.1 0.3 0.4 0.5 0.6 0.7 0.9 1. ], test: [0.2 0.8]
train: [0.1 0.2 0.3 0.4 0.5 0.7 0.8 1. ], test: [0.6 0.9]

As we can see, because it uses a more realistic training and test scenario, the K-fold cross validation technique gives us a much more stable performance measurement. On the downside, it takes a lot of time when validating deep learning models, since the model has to be trained once per fold.

CNTK does not provide built-in support for K-fold cross validation, hence we need to write our own script to do so.
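Such a script could be organised roughly as follows. This is a minimal plain-Python sketch, not CNTK code: the helper names k_fold_indices and cross_validate are hypothetical, and dummy_train_and_evaluate is only a placeholder for the part that would build, train and test a CNTK model on the given sample indices and return a metric.

```python
import random

def k_fold_indices(num_samples, k, seed=1):
    """Split shuffled sample indices into k folds of near-equal size."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th index starting at position i.
    return [indices[i::k] for i in range(k)]

def cross_validate(num_samples, k, train_and_evaluate):
    """Run k rounds, each holding out one fold, and average the metric."""
    folds = k_fold_indices(num_samples, k)
    scores = []
    for i, test_idx in enumerate(folds):
        # All folds except the i-th one form the training set.
        train_idx = [j for n, fold in enumerate(folds) if n != i for j in fold]
        scores.append(train_and_evaluate(train_idx, test_idx))
    return sum(scores) / len(scores)

# Placeholder standing in for "build, train and test a model";
# it just reports the fraction of samples held out in this round.
def dummy_train_and_evaluate(train_idx, test_idx):
    return len(test_idx) / (len(train_idx) + len(test_idx))

average = cross_validate(num_samples=150, k=5,
                         train_and_evaluate=dummy_train_and_evaluate)
print(average)
```

Passing the training step in as a function keeps the fold bookkeeping separate from the model code, so the same loop could be reused for different networks.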

Detecting underfitting and overfitting

Whether we use the hold-out dataset or the K-fold cross validation technique, we will discover that the metrics differ between the dataset used for training and the dataset used for validation.

Detecting overfitting

Overfitting is a situation where our ML model models the training data exceptionally well but fails to perform well on the testing data, i.e. it is not able to predict the test data.

It happens when an ML model learns specific patterns and noise from the training data to such an extent that it negatively impacts the model’s ability to generalise from the training data to new, i.e. unseen, data. Here, noise is the irrelevant information or randomness in a dataset.

Following are the two ways in which we can detect whether our model is overfit or not −

-

The overfit model will perform well on the same samples we used for training, but it will perform poorly on new samples, i.e. samples different from those used in training.

-

The model is overfit if, during validation, the metric on the test set is lower than the same metric on the training set (for a metric where higher is better, such as accuracy).
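The second check can be written down as a small helper. The sketch below assumes a metric where higher is better, such as accuracy; the function names, the tolerance of 0.05 and the example values are all made up for illustration and would need to be chosen per project.

```python
def generalisation_gap(train_metric, test_metric):
    """Return how much better the model scores on training data than on test data."""
    return train_metric - test_metric

def looks_overfit(train_metric, test_metric, tolerance=0.05):
    """Flag overfitting when the test metric trails the training metric by more than `tolerance`."""
    return generalisation_gap(train_metric, test_metric) > tolerance

print(looks_overfit(train_metric=0.99, test_metric=0.80))  # True
print(looks_overfit(train_metric=0.93, test_metric=0.91))  # False
```

A small tolerance is used because the two metrics are rarely identical even for a model that generalises well.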

Detecting underfitting

Another situation that can arise with our ML model is underfitting. This is a situation where our ML model does not model the training data well and fails to predict useful output. When we start training, in the first epoch our model will be underfit, but it will become less underfit as training progresses.

One way to detect whether our model is underfit is to look at the metrics for the training set and the test set. Our model will be underfit if the metric on the test set is higher than the metric on the training set.

CNTK – Neural Network Classification

In this chapter, we will study how to build a neural network classifier using CNTK.

Introduction

Classification may be defined as the process of predicting categorical output labels or responses for the given input data. The categorised output, which is based on what the model has learned in the training phase, can take a form such as “Black” or “White”, or “spam” or “no spam”.

Mathematically, it is the task of approximating a mapping function, say f, from input variables, say X, to output variables, say Y.

A classic example of a classification problem is spam detection in e-mails. Here there can be only two categories of output, “spam” and “no spam”.

To implement such classification, we first need to train the classifier, using “spam” and “no spam” emails as the training data. Once the classifier has been trained successfully, it can be used to classify an unknown email.

Here, we are going to create a 4-5-3 NN using the iris flower dataset, having the following −

-

4-input nodes (one for each predictor value).

-

5-hidden processing nodes.

-

3-output nodes (because there are three possible species in iris dataset).
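To get a feel for the size of such a 4-5-3 network, the following sketch counts its trainable parameters. It assumes fully connected layers with one bias per node, which the text above does not state explicitly; the helper count_parameters is hypothetical and not part of CNTK.

```python
layer_sizes = [4, 5, 3]  # input, hidden and output nodes

def count_parameters(sizes):
    """Count weights and biases of a fully connected network."""
    total = 0
    for n_in, n_out in zip(sizes, sizes[1:]):
        total += n_in * n_out  # one weight per connection
        total += n_out         # one bias per receiving node
    return total

print(count_parameters(layer_sizes))  # 43
```

Under these assumptions the hidden layer contributes 4 × 5 + 5 = 25 parameters and the output layer 5 × 3 + 3 = 18.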

Loading Dataset

We will be using the iris flower dataset to classify species of iris flowers based on the physical properties of sepal width and length, and petal width and length. The dataset describes the following properties of different varieties of iris flowers −

-

Sepal length

-

Sepal width

-

Petal length

-

Petal width

-

Class i.e. iris setosa or iris versicolor or iris virginica

We have the iris.CSV file which we also used in previous chapters. It can be loaded with the help of the Pandas library. But before loading it for our classifier, we need to prepare the training and test files so that they can be used easily with CNTK.

Preparing training & test files

The iris dataset is one of the most popular datasets for ML projects. It has 150 data items and the raw data looks as follows −

5.1 3.5 1.4 0.2 setosa
4.9 3.0 1.4 0.2 setosa
…
7.0 3.2 4.7 1.4 versicolor
6.4 3.2 4.5 1.5 versicolor
…
6.3 3.3 6.0 2.5 virginica
5.8 2.7 5.1 1.9 virginica

As mentioned earlier, the first four values on each line describe the physical properties of the flower, i.e. sepal length, sepal width, petal length and petal width.

However, we have to convert the data into a format that can be easily used by CNTK, namely a .ctf file (we also created an iris.ctf file in the previous section). It looks as follows −

|attribs 5.1 3.5 1.4 0.2|species 1 0 0
|attribs 4.9 3.0 1.4 0.2|species 1 0 0
…
|attribs 7.0 3.2 4.7 1.4|species 0 1 0
|attribs 6.4 3.2 4.5 1.5|species 0 1 0
…
|attribs 6.3 3.3 6.0 2.5|species 0 0 1
|attribs 5.8 2.7 5.1 1.9|species 0 0 1

In the above data, the |attribs tag marks the start of the feature values and the |species tag marks the class label values. We can also use any other tag names we wish, and we can even add an item ID as well. For example, look at the following data −

|ID 001 |attribs 5.1 3.5 1.4 0.2|species 1 0 0 |#setosa
|ID 002 |attribs 4.9 3.0 1.4 0.2|species 1 0 0 |#setosa
…
|ID 051 |attribs 7.0 3.2 4.7 1.4|species 0 1 0 |#versicolor
|ID 052 |attribs 6.4 3.2 4.5 1.5|species 0 1 0 |#versicolor
…

There are 150 data items in total in the iris dataset and, for this example, we will be using the 80-20 hold-out dataset rule, i.e. 80% of the data items (120 items) for training and the remaining 20% (30 items) for testing.
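The conversion from a raw row to a .ctf line described above can be sketched in a few lines of plain Python. This is only an illustration: it assumes the raw rows are whitespace-separated exactly as displayed earlier (a real CSV file would need a different split), and the names SPECIES and to_ctf_line are hypothetical.

```python
SPECIES = ["setosa", "versicolor", "virginica"]

def to_ctf_line(row):
    """Turn 'sl sw pl pw species' into a CTF line with a one-hot class label."""
    *values, species = row.split()
    # One-hot encoding: a 1 in the position of the matching species, 0 elsewhere.
    one_hot = ["1" if name == species else "0" for name in SPECIES]
    return "|attribs " + " ".join(values) + "|species " + " ".join(one_hot)

raw_rows = [
    "5.1 3.5 1.4 0.2 setosa",
    "7.0 3.2 4.7 1.4 versicolor",
    "6.3 3.3 6.0 2.5 virginica",
]
for row in raw_rows:
    print(to_ctf_line(row))
```

Writing the first 120 shuffled lines to one file and the remaining 30 to another would then give the training and test files for the 80-20 split.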

Constructing Classification model

First, we need to process the data files in CNTK format and for that we are going to use the helper function named create_reader as follows −

def create_reader(path, input_dim, output_dim, rnd_order, sweeps):
    x_strm = C.io.StreamDef(field='attribs', shape=input_dim, is_sparse=False)
    y_strm = C.io.StreamDef(field='species', shape=output_dim, is_sparse=False)
    streams = C.io.StreamDefs(x_src=x_strm, y_src=y_strm)
    deserial = C.io.CTFDeserializer(path, streams)
    mb_src = C.io.MinibatchSource(deserial, randomize=rnd_order, max_sweeps=sweeps)
    return mb_src

Now, we need to set the architecture arguments for our NN and also provide the location of the data files. This can be done with the following Python code −

def main():