Input embeddings give transformers vector representations of discrete tokens such as words, sub-words, or characters. However, these vectors carry no information about where each token appears in the sequence. That is why the Transformer architecture includes a critical component called “positional encoding” immediately after the input embedding sub-layer.

Positional encoding lets the model understand sequence order by giving each token in the input sequence information about its position. In this chapter, we will look at what positional encoding is, why we need it, how it works, and how to implement it in Python.

What is Positional Encoding?

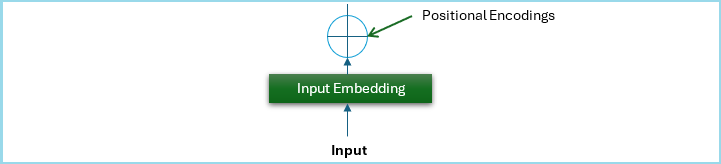

Positional Encoding is a mechanism used in Transformer to provide information about the order of tokens within an input sequence. In the Transformer architecture, positional encoding component is added after the input embedding sub-layer.

Take a look at the following diagram; it is a part of the original transformer architecture, representing the structure of positional encoding component −

Why Do We Need Positional Encoding in the Transformer Model?

Despite its powerful self-attention mechanism, the Transformer has no inherent sense of order. Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs) process a sequence one token at a time, so order is built into the computation. The Transformer, in contrast, processes all tokens in parallel, and self-attention by itself does not use the position of the tokens within the input sequence. As a result, the model cannot tell apart sequences that contain the same words in a different order, which is a problem in any task where word order affects the meaning.
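To see this concretely, here is a minimal NumPy sketch. It assumes a single attention head with randomly initialized projection matrices and no masking. The names self_attention, w_q, w_k, and w_v are illustrative and are not taken from the original Transformer code. Shuffling the input tokens only shuffles the output rows in the same way, so nothing in the result depends on where a token appeared.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention with no positional information."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Row-wise softmax (subtract the row maximum for numerical stability)
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.normal(size=(seq_len, d_model))  # toy token embeddings, no position info
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

perm = np.array([2, 0, 3, 1])  # a reordering of the four tokens
out = self_attention(x, w_q, w_k, w_v)
out_shuffled = self_attention(x[perm], w_q, w_k, w_v)

# Reordering the input only reorders the output rows in the same way
print(np.allclose(out[perm], out_shuffled))  # True
```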

To overcome this limitation, positional encoding gives each token in the input sequence information about its position. These encodings are added to the input embeddings, so the Transformer processes each token together with its positional context.
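The sketch below shows the effect of this addition. It assumes a toy embedding size of 16 and a hypothetical token (called playing here) that appears at two different positions. Without positional encoding, both occurrences have identical vectors. After the sinusoidal encodings, which are defined by the equations later in this chapter, are added, the two vectors differ. The helper name sinusoidal_pe is illustrative.

```python
import numpy as np

def sinusoidal_pe(num_positions, d_model):
    # Sinusoidal encodings as in the original Transformer (d_model assumed even)
    pos = np.arange(num_positions)[:, None]
    two_i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((num_positions, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

rng = np.random.default_rng(1)
d_model = 16
playing = rng.random(d_model)              # one embedding vector for a repeated token
embeddings = np.stack([playing, playing])  # the same token appearing twice
positions = [2, 11]                        # hypothetical positions of the two occurrences

pe = sinusoidal_pe(max(positions) + 1, d_model)
with_pos = embeddings + pe[positions]

print(np.array_equal(embeddings[0], embeddings[1]))  # True: identical without positions
print(np.allclose(with_pos[0], with_pos[1]))          # False: distinct after adding PE
```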

How Does Positional Encoding Work?

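As discussed in the previous chapter, the Transformer expects every vector representation to have the same fixed dimension, d_model (512 in the original model, although any constant value can be used). The positional encoding function must therefore also output a vector of size d_model for each position, so that it can be added to the corresponding input embedding.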

As an example, consider the sentence below −

I am playing with the brown ball and my brother is playing with the red ball.

The words “brown” and “red” are similar (both are colors), but in this sentence they are far apart. Counting from 1, the word “brown” is at position 6 (pos = 6) and the word “red” is at position 15 (pos = 15).
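A quick way to check these positions is shown below. It assumes simple whitespace tokenization with the final period removed. Python, and the NumPy code later in this chapter, count positions from 0, so in that scheme “brown” would be at index 5 and “red” at index 14.

```python
sentence = "I am playing with the brown ball and my brother is playing with the red ball."
# Remove the final period and split on whitespace to get word tokens
words = sentence.rstrip(".").split()

# Positions counted from 1, as in the text above
pos_brown = words.index("brown") + 1
pos_red = words.index("red") + 1
print(pos_brown, pos_red)   # 6 15
print(pos_red - pos_brown)  # 9
```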

The problem, then, is to add a value to the embedding of each word in the input sentence so that it carries information about the word's position in the sequence. Moreover, this information must be provided across every dimension of the word embedding, that is, for each of the d_model = 512 dimensions (indices 0 to 511).
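To see why the added value has to be chosen carefully, the sketch below compares a naive scheme with the bounded sinusoidal values described next. The naive scheme, which adds the raw position index to every dimension, is only an illustrative strawman. The sketch assumes random embeddings in [0, 1), like the ones used later in this chapter, and a sequence of 100 tokens. With the naive scheme, the added value grows with the position and quickly swamps the embedding values. The sinusoidal values always stay between −1 and 1.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, seq_len = 512, 100
# Hypothetical embeddings with values in [0, 1)
embeddings = rng.random((seq_len, d_model))

# Naive idea: add the raw position index to every dimension
naive = embeddings + np.arange(seq_len)[:, None]

# Sinusoidal idea: add values bounded in [-1, 1] that vary across dimensions
pos = np.arange(seq_len)[:, None]
two_i = np.arange(0, d_model, 2)[None, :]
angles = pos / np.power(10000.0, two_i / d_model)
pe = np.zeros((seq_len, d_model))
pe[:, 0::2] = np.sin(angles)
pe[:, 1::2] = np.cos(angles)
sinusoidal = embeddings + pe

print(naive.max())              # between 99 and 100: dominated by the position index
print(np.abs(pe).max() <= 1.0)  # True: the encoding stays on the embedding's scale
print(sinusoidal.max() < 2.0)   # True
```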

Positional encoding can be achieved in many ways, but Vaswani et al. (2017), in the original Transformer model, used a specific method based on sinusoidal functions to generate a unique encoding for each position in the sequence.

The equations below define the positional encoding for a given position pos and dimension index i. Even dimensions (2i) use a sine function and odd dimensions (2i + 1) use a cosine function −

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

$$PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

Here, pos is the position of the token in the sequence, and d_model is the dimension of the embeddings (512 in the original Transformer).
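As a worked example, the sketch below evaluates the two equations one value at a time in plain Python. The helper name pe_value is hypothetical. For pos = 1, dimensions 0 and 1 give sin(1) ≈ 0.841 and cos(1) ≈ 0.540. These are the first two values in the second row of the positional encodings printed later in this chapter.

```python
import math

def pe_value(pos, dim, d_model):
    """Positional encoding for a single position and dimension, following the equations above."""
    i = dim // 2  # dimensions 2i and 2i+1 share the same i (and therefore the same frequency)
    angle = pos / (10000 ** (2 * i / d_model))
    return math.sin(angle) if dim % 2 == 0 else math.cos(angle)

d_model = 512
# Position 1, first four dimensions
row = [pe_value(1, dim, d_model) for dim in range(4)]
print([round(v, 8) for v in row])
```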

Creating Positional Encodings Using Sinusoidal Functions

Given below is a Python script to create positional encodings using sinusoidal functions −

```python
import numpy as np

def positional_encoding(max_len, d_model):
    # One row per position, one column per embedding dimension (d_model assumed even)
    pe = np.zeros((max_len, d_model))
    # Column vector of positions 0, 1, ..., max_len - 1
    position = np.arange(0, max_len).reshape(-1, 1)
    # 1 / 10000^(2i / d_model) for each even dimension index 2i, computed in log space
    div_term = np.exp(np.arange(0, d_model, 2) * -(np.log(10000.0) / d_model))
    # Even dimensions use sine, odd dimensions use cosine
    pe[:, 0::2] = np.sin(position * div_term)
    pe[:, 1::2] = np.cos(position * div_term)
    return pe
```
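The div_term line looks different from the equations, but it computes the same factor 1 / 10000^(2i/d_model), written as exp(−2i · ln(10000) / d_model). The small check below assumes d_model = 512 and confirms that the two forms agree. Computing the factor through exp and log is a common idiom in implementations. For d_model values of this size, either form should give the same result.

```python
import numpy as np

d_model = 512
two_i = np.arange(0, d_model, 2)  # even dimension indices 0, 2, 4, ..., 510

# Form used in the script: exp(2i * -(ln 10000 / d_model))
div_term_log = np.exp(two_i * -(np.log(10000.0) / d_model))
# Form used in the equations: 1 / 10000^(2i / d_model)
div_term_pow = 1.0 / np.power(10000.0, two_i / d_model)

print(div_term_log.shape)                       # (256,)
print(np.allclose(div_term_log, div_term_pow))  # True
# 1.0 for dimension 0; roughly 1.04e-04 (the lowest frequency) for dimension 510
print(div_term_log[0], div_term_log[-1])
```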

Now, let's see how to add these encodings to the input embeddings we implemented in the previous chapter −

```python
import numpy as np

# Example text and tokenization
text = "Transformers revolutionized the field of NLP"
tokens = text.split()

# Creating a vocabulary
vocab = {word: idx for idx, word in enumerate(tokens)}

# Example input (sequence of token indices)
input_indices = np.array([vocab[word] for word in tokens])

print("Vocabulary:", vocab)
print("Input Indices:", input_indices)

# Parameters
vocab_size = len(vocab)
embed_dim = 512  # Dimension of the embeddings

# Initialize the embedding matrix with random values
embedding_matrix = np.random.rand(vocab_size, embed_dim)

# Get the embeddings for the input indices
input_embeddings = embedding_matrix[input_indices]

print("Embedding Matrix:\n", embedding_matrix)
print("Input Embeddings:\n", input_embeddings)

def positional_encoding(max_len, d_model):
    # One row per position, one column per embedding dimension (d_model assumed even)
    pe = np.zeros((max_len, d_model))
    # Column vector of positions 0, 1, ..., max_len - 1
    position = np.arange(0, max_len).reshape(-1, 1)
    # 1 / 10000^(2i / d_model) for each even dimension index 2i, computed in log space
    div_term = np.exp(np.arange(0, d_model, 2) * -(np.log(10000.0) / d_model))
    # Even dimensions use sine, odd dimensions use cosine
    pe[:, 0::2] = np.sin(position * div_term)
    pe[:, 1::2] = np.cos(position * div_term)
    return pe

# Parameters
max_len = len(tokens)

# Generate positional encodings
pos_encodings = positional_encoding(max_len, embed_dim)

# Adjust the length of the positional encodings to match the input
input_embeddings_with_pos = input_embeddings + pos_encodings[:len(tokens)]

print("Positional Encodings:\n", pos_encodings)
print("Input Embeddings with Positional Encoding:\n", input_embeddings_with_pos)
```
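In this script, max_len equals len(tokens), so the slice pos_encodings[:len(tokens)] returns the whole table. The slice matters when the encodings are computed once for a longer maximum length and then reused for shorter inputs. The sketch below assumes a hypothetical maximum length of 128 and a 6-token input with random embeddings. It repeats the positional_encoding function so that it runs on its own.

```python
import numpy as np

def positional_encoding(max_len, d_model):
    # Same sinusoidal construction as the script above (d_model assumed even)
    pe = np.zeros((max_len, d_model))
    position = np.arange(0, max_len).reshape(-1, 1)
    div_term = np.exp(np.arange(0, d_model, 2) * -(np.log(10000.0) / d_model))
    pe[:, 0::2] = np.sin(position * div_term)
    pe[:, 1::2] = np.cos(position * div_term)
    return pe

# Hypothetical setup: precompute the table once for the longest expected sequence
max_len, d_model = 128, 512
pe_table = positional_encoding(max_len, d_model)

# A shorter input (6 tokens, like the example text) with random embeddings
rng = np.random.default_rng(0)
input_embeddings = rng.random((6, d_model))

# Keep only the first seq_len rows so the shapes match before adding
seq_len = input_embeddings.shape[0]
with_pos = input_embeddings + pe_table[:seq_len]

print(with_pos.shape)  # (6, 512)
# The sliced rows equal the encodings computed directly for the short sequence
print(np.allclose(pe_table[:seq_len], positional_encoding(seq_len, d_model)))  # True
```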

Output

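After running the above script, we get output similar to the following. Because the embedding matrix is initialized with random values, the embedding values (and therefore their sums with the encodings) will differ from run to run. The positional encodings are the same every time −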

```
Vocabulary: {'Transformers': 0, 'revolutionized': 1, 'the': 2, 'field': 3, 'of': 4, 'NLP': 5}
Input Indices: [0 1 2 3 4 5]
Embedding Matrix:
[[0.71034683 0.08027048 0.89859858 ... 0.48071898 0.76495253 0.53869711]
 [0.71247114 0.33418585 0.15329225 ... 0.61768814 0.32710687 0.89633072]
 [0.11731439 0.97467007 0.66899319 ... 0.76157481 0.41975638 0.90980636]
 [0.42299987 0.51534082 0.6459627 ... 0.58178494 0.13362482 0.13826352]
 [0.2734792 0.80146145 0.75947837 ... 0.15180679 0.93250566 0.43946461]
 [0.5750698 0.49106984 0.56273384 ... 0.77180581 0.18834177 0.6658962 ]]
Input Embeddings:
[[0.71034683 0.08027048 0.89859858 ... 0.48071898 0.76495253 0.53869711]
 [0.71247114 0.33418585 0.15329225 ... 0.61768814 0.32710687 0.89633072]
 [0.11731439 0.97467007 0.66899319 ... 0.76157481 0.41975638 0.90980636]
 [0.42299987 0.51534082 0.6459627 ... 0.58178494 0.13362482 0.13826352]
 [0.2734792 0.80146145 0.75947837 ... 0.15180679 0.93250566 0.43946461]
 [0.5750698 0.49106984 0.56273384 ... 0.77180581 0.18834177 0.6658962 ]]
Positional Encodings:
[[ 0.00000000e+00 1.00000000e+00 0.00000000e+00 ... 1.00000000e+00
   0.00000000e+00 1.00000000e+00]
 [ 8.41470985e-01 5.40302306e-01 8.21856190e-01 ... 9.99999994e-01
   1.03663293e-04 9.99999995e-01]
 [ 9.09297427e-01 -4.16146837e-01 9.36414739e-01 ... 9.99999977e-01
   2.07326584e-04 9.99999979e-01]
 [ 1.41120008e-01 -9.89992497e-01 2.45085415e-01 ... 9.99999948e-01
   3.10989874e-04 9.99999952e-01]
 [-7.56802495e-01 -6.53643621e-01 -6.57166863e-01 ... 9.99999908e-01
   4.14653159e-04 9.99999914e-01]
 [-9.58924275e-01 2.83662185e-01 -9.93854779e-01 ... 9.99999856e-01
   5.18316441e-04 9.99999866e-01]]
Input Embeddings with Positional Encoding:
[[0.71034683 1.08027048 0.89859858 ... 1.48071898 0.76495253 1.53869711]
 [1.55394213 0.87448815 0.97514844 ... 1.61768813 0.32721053 1.89633072]
 [1.02661182 0.55852323 1.60540793 ... 1.76157479 0.4199637 1.90980634]
 [0.56411987 -0.47465167 0.89104811 ... 1.58178489 0.13393581 1.13826347]
 [-0.4833233 0.14781783 0.1023115 ... 1.15180669 0.93292031 1.43946452]
 [-0.38385447 0.77473203 -0.43112094 ... 1.77180567 0.18886009 1.66589607]]
```
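The first row of the combined output is easy to check by hand. At position 0, every angle is 0, so the encoding is sin(0) = 0 on even dimensions and cos(0) = 1 on odd dimensions. Adding it therefore increases every odd-indexed embedding value by 1. The sketch below reproduces this, using the first three embedding values copied from the output above.

```python
import numpy as np

d_model = 512
pos = 0
two_i = np.arange(0, d_model, 2)
angles = pos / np.power(10000.0, two_i / d_model)  # all angles are 0 at position 0

pe_row0 = np.zeros(d_model)
pe_row0[0::2] = np.sin(angles)  # sin(0) = 0 on even dimensions
pe_row0[1::2] = np.cos(angles)  # cos(0) = 1 on odd dimensions
print(pe_row0[:6])  # [0. 1. 0. 1. 0. 1.]

# First three values of the first embedding row, copied from the output above
emb_row0 = np.array([0.71034683, 0.08027048, 0.89859858])
combined = emb_row0 + pe_row0[:3]
print(combined)  # same as the first three values of the first combined row
```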

Conclusion

In this chapter, we covered the basics of positional encoding: why it is needed, how it works, how to implement it in Python, and how it is integrated into the Transformer model. Positional encoding is a fundamental component of the Transformer architecture that enables the model to capture the order of tokens in a sequence.

Understanding and implementing positional encoding is important for making full use of the Transformer model and applying it effectively to complex NLP problems.